How does ChatGPT score in a Public Economics exam?

1. INTRODUCTION

The term "artificial intelligence" (AI) is used to describe technologies that are capable of performing tasks or functions that would usually require human intelligence or sensory abilities. This branch of computing science is concerned with the development of software algorithms and techniques that enable computers and machines to simulate human perception and decision-making processes, thereby enabling them to successfully complete tasks. In essence, AI is a technology that enables the construction of systems capable of thinking and acting in a manner analogous to humans, with the capacity to achieve defined objectives.

As in all economic and social areas, the advent of AI will have profound implications for higher education. The application of AI to education (AIEd) has been hailed as one of the most significant developments of the century,[1] with the capacity to transform the educational landscape and influence the role of all involved stakeholders.[2] Generative artificial intelligence algorithms with the ability to produce coherent and contextually relevant texts represent an important milestone in this process. The long-term implications of cognitive automation driven by generative artificial intelligence extend beyond the field of education, with a revolutionary impact on research in most scientific disciplines.[3]

The objective of this paper is to assess the potential contribution of generative artificial intelligence to the teaching of Public Economics, with a particular focus on the efficiency with which it can assist teachers in preparing lecture, class, and seminar materials. To this end, the paper assesses the performance of ChatGPT-3.5 by OpenAI in solving an undergraduate Public Economics exam. This study builds upon several previous papers that have demonstrated the ability of versions of ChatGPT to pass standardised tests in various fields. These include mathematics,[4] medicine,[5] law,[6] physics,[7] and economics.[8]

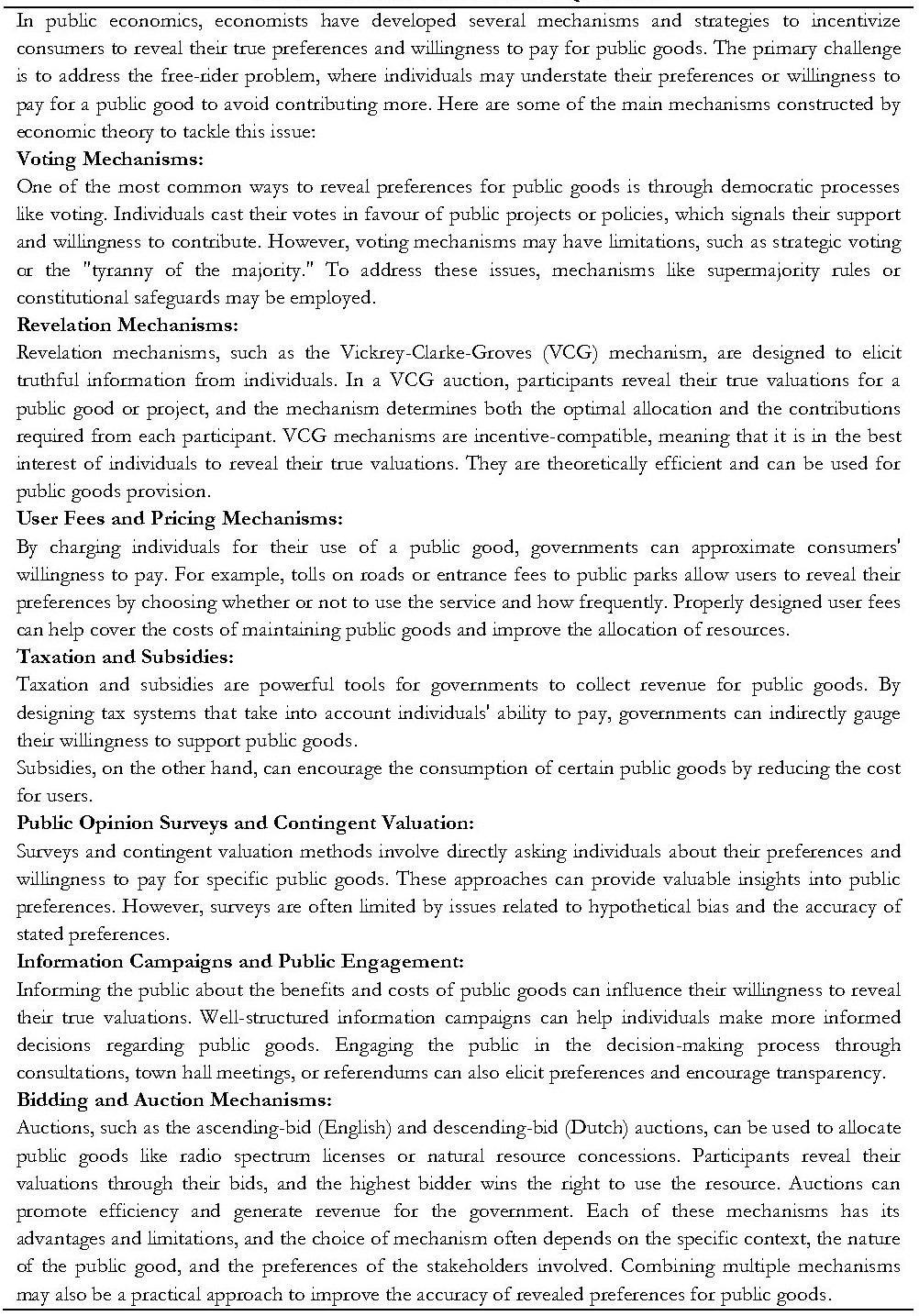

The algorithm was presented with ten theoretical questions and four practical exercises. The ChatGPT-3.5 version achieved a grade of B- in accordance with the selected evaluation criteria.

The remainder of the article is structured as follows: Section 2 examines the promises and challenges of the development of artificial intelligence in education. Section 3 addresses the implications of the emergence of generative AI. Section 4 outlines the methodology employed. Section 5 presents the results obtained. Section 6 concludes. Appendix A shows the answers given by the algorithm. Appendix B provides a rationale for the scores given.

2. PROMISES AND CHALLENGES OF ARTIFICIAL INTELLIGENCE IN EDUCATION

Today it is almost impossible to think about the future of the education industry without considering the impact of AI. There is a consensus that AI will transform the industry by changing access to knowledge, teaching tools, approaches to learning and even the way teachers are trained.[9] AIEd refers to the use of AI for learning, assessment of learning and other fundamental educational purposes, including management and administration. AI has penetrated several areas of educational practice, including non-teaching tasks such as timetabling, resource allocation, student tracking, and reporting on students to their parents or guardians.[10]

The integration of AI into various tasks in this industry is expected to result in an increasing complementarity between machine and human performance. Algorithms can be of significant utility in the provision of basic content and for the expeditious assessment of essays and tests. In this context, teaching assistants may be tasked with monitoring the grades assigned by AI algorithms and providing the human touch needed to motivate students and address non-academic learning issues. Experienced educators can concentrate on more complex pedagogical duties, such as devising novel instructional methodologies, offering feedback on oral and written communication, and cultivating an achievement-oriented classroom environment.[11]

With regard to the direct interaction between AI algorithms and learners, it is worth mentioning a number of relevant arguments and assertions:

- The utilisation of AIEd may facilitate a deeper comprehension of students’ learning processes and enhance their learning experiences.

- It is possible to utilise AI algorithms effectively in the context of student profiling for the purposes of admission and retention.

- In the context of Intelligent Tutoring Systems (ITS), educational robots are being employed in a variety of roles, including that of a teaching assistant, peer and co-learner, and companion. Furthermore, they are a fundamental component of some learning platforms.[12]

- ITS-type applications are employed both as the primary mode of instruction and integrated into teacher-led courses.[13]

- AI-based predictive analytics algorithms can also assist in the identification of students who are at risk of not completing or passing their course. This allows universities to implement appropriate intervention strategies. The field of learning analytics uses data mining techniques to identify patterns within large data sets, including those generated on the internet.

- AIEd can provide just-in-time assessments that can be useful in facilitating and accelerating relevant adaptations of learning systems, with the aim of improving student performance and ensuring that curricula are aligned with educational policies.[14]

- The utilisation of AIEd applications has the potential to enhance the efficacy of educational processes, as it can facilitate the expeditious attainment of specified levels of proficiency by students.[15]

- AIEd can facilitate the development of personalised learning systems. Given the diversity of learners in terms of their strengths and needs, AI-based adaptive learning platforms can be employed to enhance the learning process.[16]

- Automated AI-based Essay Scoring Systems can assess and provide feedback to students, while helping to manage teacher workload.[17]

- The use of technology-based algorithms in the field of facial recognition has become a valuable tool in the proctoring of online examinations, as well as in the analysis of student behaviour.[18]

- AIEd algorithms can also be used to facilitate connections between students and between students and their teachers. The consequence of this is an enhanced efficiency in the process of learning.[19]

There is a growing concern across society about the potential impact of the increasing use of artificial intelligence. Education is no exception to this trend. Among the main threats and vulnerabilities to the use of AI, the following have been highlighted:

- The widespread use of AI applications can have a major impact on the education labour market. AI already has the capacity to replace many administrative and teaching assistants in higher education.[20] Artificial intelligence is also forcing a rethink of the role of the teacher, and even to consider the partial replacement of teachers by virtual teacher-bots.[21]

- The implementation of AI techniques in education could potentially have a detrimental impact on the relationship between teachers and students. It is essential to investigate AI approaches that empower teachers, in order to prevent the emergence of conflicting authority structures between staff, machines, companies, and students.[22]

- AIEd can result in students becoming overly reliant on online platforms and artificial teaching assistants.

- Intelligent Tutoring Systems have the potential to diminish the quality of higher education. Due to their complexity, the implemented applications may not perform as promised. Consequently, placing undue reliance on these systems could have the unintended consequence of undermining the level of excellence.

- Furthermore, it is challenging for ITS algorithms to model and adapt to student behaviours, skills, and states of mind, which are often less structured and well-defined than those of traditional problem-solving.[23]

- Confusing the appearance of intelligence with actual intelligence can lead to the mistaken belief that AI tools can do more than they can. No AI system, including today's LLMs, is truly intelligent, because none of them really understands anything.[24]

- In relation to AI-based predictive analytics, staff and students have raised concerns about the potential for misinterpretation of data, constant monitoring, lack of transparency, inadequate support, and the potential to inhibit active learning.[25]

- It may be the case that the implementation of AI techniques results in an alteration of the content of programmes, leading to deviations from the intended educational policy.

- The widespread use of AI could result in an over-individualised approach to education. This would result in a lack of emotional intelligence and an inability to acquire social values, which are typically attained through conventional education.

- For AI to be integrated into the university culture, stakeholders must be receptive to its adoption. This requires reliable algorithms and a widespread perception that the negative effects of its use will be mitigated.

- The existence of black boxes represents a structural weakness of artificial intelligence systems. Sometimes, it is difficult to explain the results generated in the context of AIEd, which raises doubts about how the models make their inferences or the objective functions that they employ to evaluate educational performance.[26]

The use of AI algorithms in higher education has the potential to deliver significant advances. However, there are also a number of challenges that must be overcome. As with other areas of AI, ethical considerations are of particular importance. It is imperative that universities develop robust policies and research agendas that take into account the ethical implications of implementing AIEd systems. In this regard, the following key issues must be addressed:

- The rise of AIEd has led to a proliferation of interaction logs, which has generated a significant amount of data about students. While there is still debate about what should be considered personal data, it is evident that there are significant privacy concerns about the vast majority of the data being generated.[27]

- Other areas of concern include the issue of ownership and rights over educational records, as well as consent for the use of the data.[28]

- One of the key objectives of the AIEd initiative is to enhance educational equality and narrow the attainment gap between different student groups. However, it is unclear to what extent this can be achieved, and there is a risk of perpetuating digital exclusion through algorithmic bias. Indeed, there is some debate as to whether the use of AIEd may exacerbate inequalities.[29]

- One challenge in the construction of AIEd systems is the potential lack of transparency regarding the pedagogical assumptions, the data on which their models are built, or the socio-cultural orientation of the curriculum.[30]

- Another concern regarding AIEd systems is the potential lack of protection for human autonomy. This is defined as the user's ability to modify the system's operation or to be excluded from it when adverse effects may occur.[31]

- The presence of ideological and cultural biases in implemented AIEd systems could, in practice, lead to changes in programmes that define what is important to know and how students should learn.[32]

On another note, given the increasing dominance of AI in cognitive tasks in education, it is not unexpected that the question has arisen as to whether universities should change their methods. There is a consensus that not only mastery of content, but also the acquisition of non-cognitive skills play an important role in students' academic and future career outcomes. Innovations in AI can adapt the classroom experience, allowing teachers to focus more on helping students develop these important skills.[33] In light of the future employability of their students, should universities focus their efforts on developing only human skills? Should universities pay less attention to the development of skills aimed at performing tasks that AI can do (even better than humans)? And if this is the case, one wonders what the uniquely human skills will be in the near future.

3. THE EMERGENCE OF GENERATIVE AI: DOES IT REPRESENT A CHANGE?

Generative artificial intelligence (GAI) systems can produce text, images, video, music, and other types of content, including machine code, as well as performing translations. One such system is the Generative Pre-trained Transformer (GPT).[34] GPT's strength lies in its ability to generate coherent and contextually relevant text in various natural language processing tasks, including language translation, text generation, and text completion. Using natural language processing (NLP) and a wealth of publicly available digital data, GPT models can read and produce human-like text in multiple languages and demonstrate creativity by writing anything from a paragraph to an entire article convincingly on countless topics.[35]

OpenAI's models are currently the most popular LLMs on the market. There is a free version based on the GPT-3.5 model (ChatGPT-3.5) and a paid version based on GPT-4 (ChatGPT-4). ChatGPT's public launch on 30 November 2022 prompted reflection on the impact of generative pre-trained transformer systems on education.[36] Other chat engines include Microsoft's Bing (also based on GPT-3.5/4), which can browse the Internet in real time. Google's Bard, which is based on its PaLM-2 Bison model, is comparable to Bing in that it can search the web to include real-time information in its responses to user queries. It offers functionality at a similar level to ChatGPT-3.5. Other AI-based chat tools currently available on the market include Anthropic's Claude 2 and Meta's Llama 2, among others.

Although there is consensus that these systems have the capacity to transform current educational practice,[37] the educator community has expressed mixed feelings about their extraordinary ability to perform complex tasks. Those who are optimistic about the impact of GPT models argue that they have the potential to improve the quality of several AI-based educational systems, such as those related to Personalised Tutoring, Automated Essay Grading, Interactive Learning, or Adaptive Learning. By contrast, those who are sceptical about the prospect of using these models for educational purposes argue that their generalised use could lead to the appropriation of intellectual property and enable opportunistic students to engage in academic misconduct such as cheating and fraud. It has also been argued that these systems do not solve the problem of the lack of human interaction that is common to algorithm-based tutoring systems. The sceptics also point out that GPT models (which are simply based on statistical patterns) still show a very limited understanding (if any at all). Similarly, dialogue systems based on GPT models have shown limited ability to generate contextually appropriate responses in a conversation and to personalise instruction. Furthermore, it has been pointed out that GPT-based tutoring systems still lack the ability to provide explanations adapted to the students' needs and, worse still, sometimes provide incorrect, inappropriate, or irrelevant answers (especially when the training data is not sufficiently relevant to the area being addressed). Finally, it has been argued that GPT does not solve the problem of biased results (as it still depends on the quality of training data).

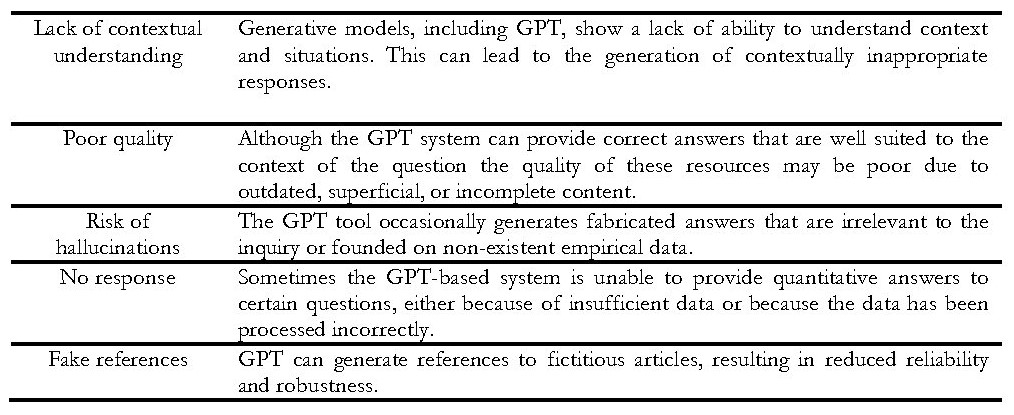

Essentially five types of potential deficiency have been identified in the academic responses provided by GPT, as shown in Table 1.

Table 1. Potential shortcomings in the responses provided by GPT

The objective of this article is not to provide information about whether a specific version of ChatGPT can pass a Public Economics examination. Instead, the aim is to utilise the insights gained from its responses to evaluate four key questions:

- Can teachers utilise this tool to enhance their efficiency when preparing material for lectures, exercises and seminars?

- How should the role of essays and non-classroom exams be reviewed in the context of AI tools?

- Can teachers rely on this AI tool for tutoring?

- Should students be encouraged to use AI-based chat in their learning process so that their ability to formulate prompts is improved?

One of the key responsibilities of a teacher is to prepare practical exercises, suggest essays, and correct students' work. If there is sufficient confidence in the capabilities of tools such as AI-based chat, their use should result in a reduction in the teaching load for these tasks, an increase in the quality and variety of materials, and an increase in the time available for face-to-face tutoring.

It has been demonstrated that if a question is entered into an AI-based chat, an essay will be generated in a matter of seconds. This raises the question of whether the traditional approach to university writing is now obsolete.[38] This is not necessarily the case. Essays will continue to be an integral component of students' academic work. However, the advent of AI-based chats necessitates a re-evaluation of the nature of the questions posed: their level of complexity should be significantly higher. The objective of the tests is not merely to assess students' writing abilities, but also to ascertain their capacity to integrate theoretical concepts, resolve apparent contradictions, and apply their acquired knowledge to practical scenarios.

In the context of essay writing assignments and open-book examinations, the primary objective of monitoring has traditionally been to ensure that students are the authors of their work. The advent of AI chatbots has introduced a new risk factor into the equation, namely the possibility of cheating. Nevertheless, the development of AI-based software also enables educators to utilise plagiarism detection software to ascertain whether submitted material has been copied.[39]

It has been argued that the use of AI tools can make students too dependent on technology and prevent them from developing their own problem-solving skills, while at the same time stifling their creativity. However, we should not forget that students will inevitably enter a world where AI is commonplace.[40] According to the human capital theory developed by Gary Becker in the 1960s, investing in the education and training of individuals can help increase the productivity of workers. Given that increased productivity is precisely the most expected outcome of the use of artificial intelligence tools, training university students in AI systems seems a relevant goal. And this includes the acquisition of skills in how best to query AI-based chats. The critical analysis of texts generated by artificial intelligence (AI) tools can facilitate the enhancement of writing skills. Consequently, in addition to the continued requirement for students to write essays, a potential aspect of the university education process could be the evaluation of not only the outcome, but also the questions asked and the iterative process followed in the use of text-generative AI systems. As with any computer system, the quality of the output of an AI system depends on the quality of the input data. The process of developing effective instructions for generative AI systems (called 'prompt engineering') should be considered as part of the learning process. To improve the quality of content generated by LLM systems, it is essential to provide them not only with clear questions, but also with contextual and stylistic instructions. For optimal LLM results, it is recommended to be iterative and patient. When using GPT software to teach economics, teachers can think of this AI-based tool not as a database, but as a large collection of economists, historians and scientists to whom questions can be posed.[41]

The utilisation of artificial intelligence (AI) tools is set to become ubiquitous across the entire spectrum of professional life. Today's university students will soon enter a world of work in which the way they use AI tools will determine not only their productivity but will also affect the ethical standards of their work. The utilisation of AI tools in the educational setting represents an ideal opportunity to educate students about the ethical implications of such technology. All teachers, regardless of their subject area, have the potential to integrate these objectives into their educational methodology.

Intelligent tutoring systems have the potential to tailor lessons to the specific needs of learners. By offering learning experiences that are tailored to the unique needs and interests of each student, personalised learning platforms can enhance student engagement and motivation.[42] However, in the current state of ChatGPT, educators may be reluctant to use this artificial intelligence tool in tutoring tasks because of the possibility that it may provide answers that contain hallucinations. While human-written texts show a strong correlation between authoritative style and reality-based content, LLM-generated texts can sometimes appear to be of high quality in style but lacking in reliability in content.[43] It is clear that this risk exists, but the use of AI-based chatbots for academic tasks can help students develop critical thinking skills and the ability to be alert to failures in the output of AI-based systems. Such abilities will prove invaluable in their future careers.

4. METHODOLOGY

The methodology employed involved posing a series of questions to ChatGPT-3.5[44] on topics from the Public Economics course. This course is usually taught as an elective in the second or third year of the Economics degree programme. The Public Economics course currently consists of analysing various decisions involving the public sector and their consequences using microeconomic tools. It could be said that it is a course in microeconomics applied to the field of taxation and public spending. The usual prerequisites for Public Economics include courses in Mathematics and Microeconomics. In some universities this subject is taught under names such as Economics of the Public Sector or Public Finance. Other universities offer part of the subject content under courses called Economic Policy Analysis, Economics of Tax Policy, Economics of Inequality, etc. Regarding the syllabus, the subject of Public Economics typically covers topics such as Theories of the Public Sector, Market failures (such as Externalities, Public goods or Imperfect competition), Public Choice and voting, Commodity taxation, Income taxation, Optimal taxation, Tax evasion, Fiscal Federalism, Redistribution policies, Public expenditure, and Fiscal Policies, among others. Some of the most commonly used textbooks at the undergraduate level include Stiglitz and Rosengard (2015), Kennedy (2012), Gruber (2005), Hyman (2005), and Rosen and Gayer (1995). Texts by Hindriks and Myles (2013) and Myles (2005) are usually used at the intermediate level. In the Master of Science in Economics and PhD in Economics degrees, Atkinson and Stiglitz (2015) and Auerbach et al. (2013) are commonly used.

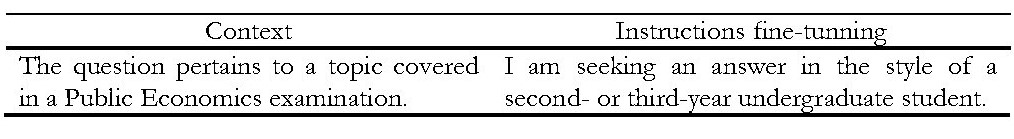

The test designed consists of ten theoretical questions and four exercises on a range of Public Finance topics. The questions were posed to ChatGPT-3.5 during the month of November 2023. The prompts that are common to all questions are presented in Table 2.

Table 2. Common part of prompts

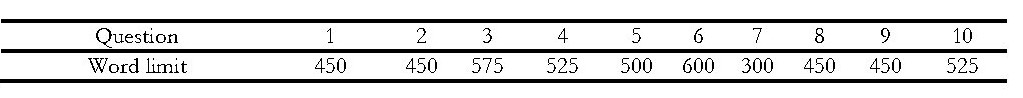

In the case of a human examinee, each theoretical question would normally be expected to be answered within an average of 15-20 minutes. The number of words typically written by an economics student depends on several factors, such as the student's writing speed, familiarity with the subject and the type of questions asked, but is generally between 400 and 600 words. Taking this into account, an additional instruction was incorporated into the prompt provided to ChatGPT-3.5, specifying a maximum number of words for each question. This information is presented in Table 3.

Table 3. Instructions in relation to the length of answers

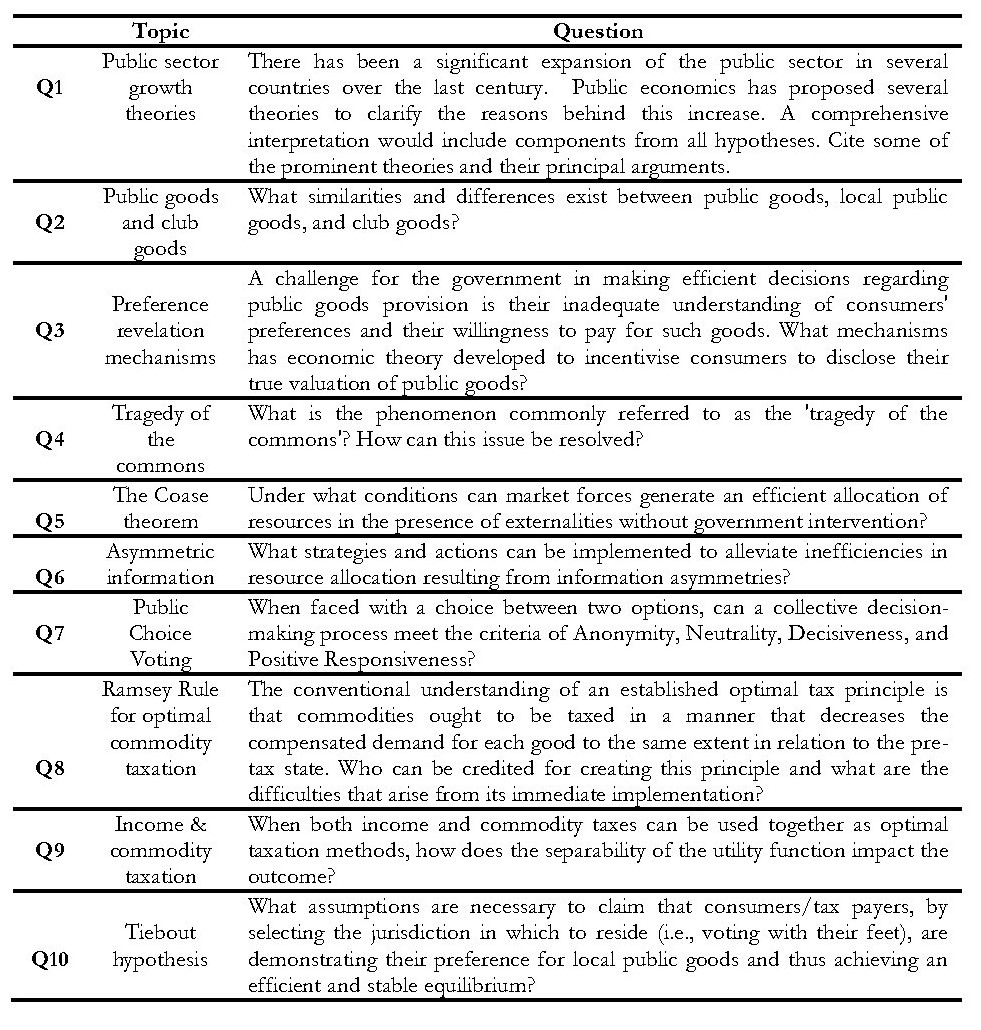
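As an illustration of how a common prompt, a question and a length instruction can be combined, the following Python sketch assembles a prompt and checks a reply against the word limit. It is a minimal sketch under stated assumptions: the function names `build_prompt` and `within_word_limit`, the wording of the length instruction and the sample texts are hypothetical and do not reproduce the prompts used in this study.

```python
def build_prompt(common_part: str, question: str, max_words: int) -> str:
    """Join the shared instructions, the question and a word cap into one prompt."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    # Hypothetical wording of the length instruction.
    length_rule = f"Answer in no more than {max_words} words."
    return "\n\n".join([common_part.strip(), question.strip(), length_rule])


def within_word_limit(answer: str, max_words: int) -> bool:
    """Return True if the answer, split on whitespace, respects the cap."""
    return len(answer.split()) <= max_words


# Placeholder texts for illustration only, not the ones used in the study.
prompt = build_prompt(
    common_part="You are sitting an undergraduate Public Economics exam.",
    question="Explain the difference between public goods and club goods.",
    max_words=500,
)
print(prompt)
```

Keeping the common part, the question and the length rule as separate arguments makes it straightforward to reuse the same shared instructions across all questions while varying only the word cap.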

The prompts for the theoretical questions are presented in Table 4.

Table 4. Theoretical questions

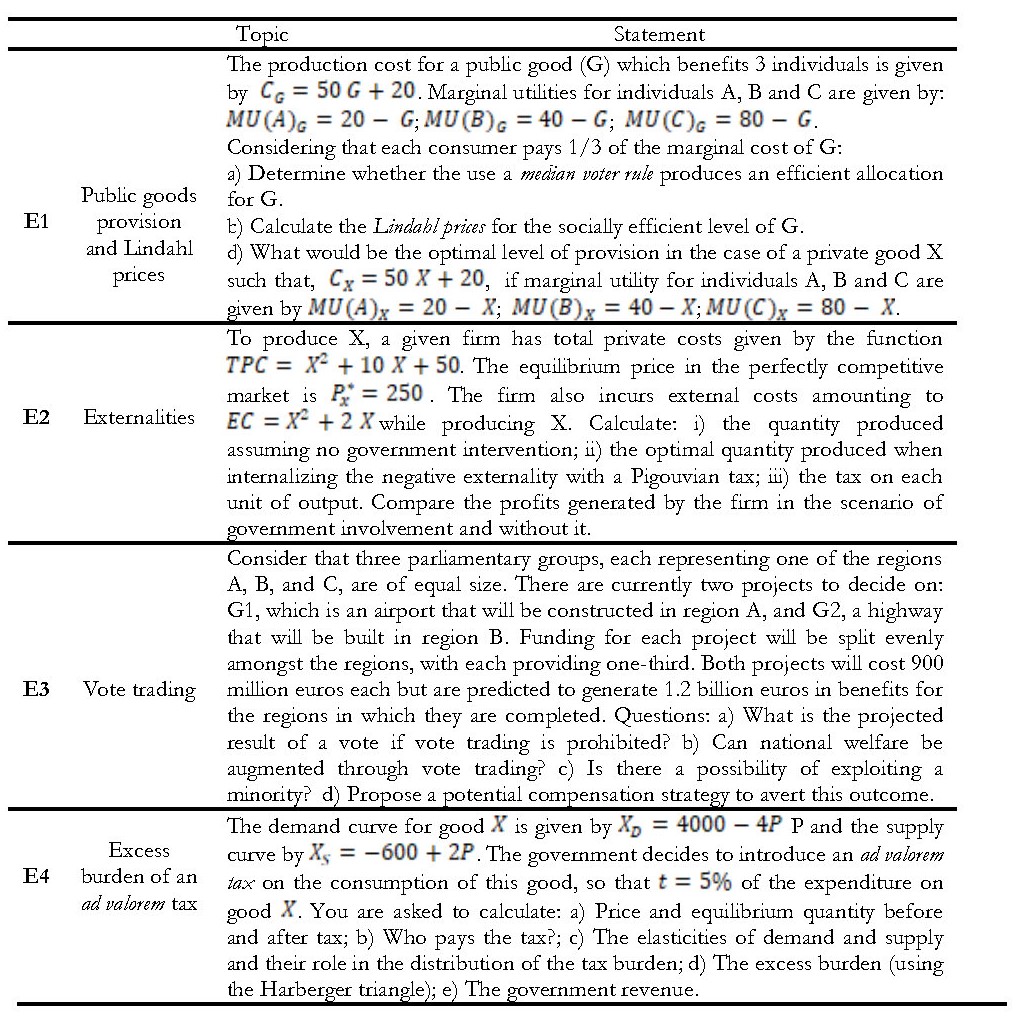

The exercise questions are shown in Table 5.

Table 5. Exercises

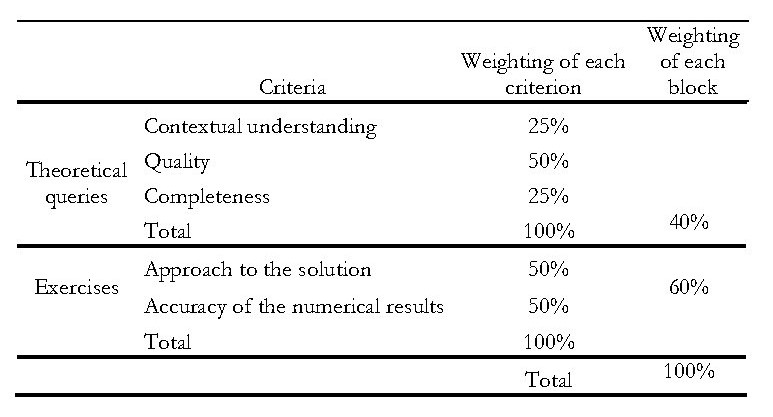

The responses offered by ChatGPT-3.5 to the queries and exercises are detailed in Appendix A. The answers were scored according to the criteria shown in Table 6:

Table 6. Assessment criteria

5. RESULTS

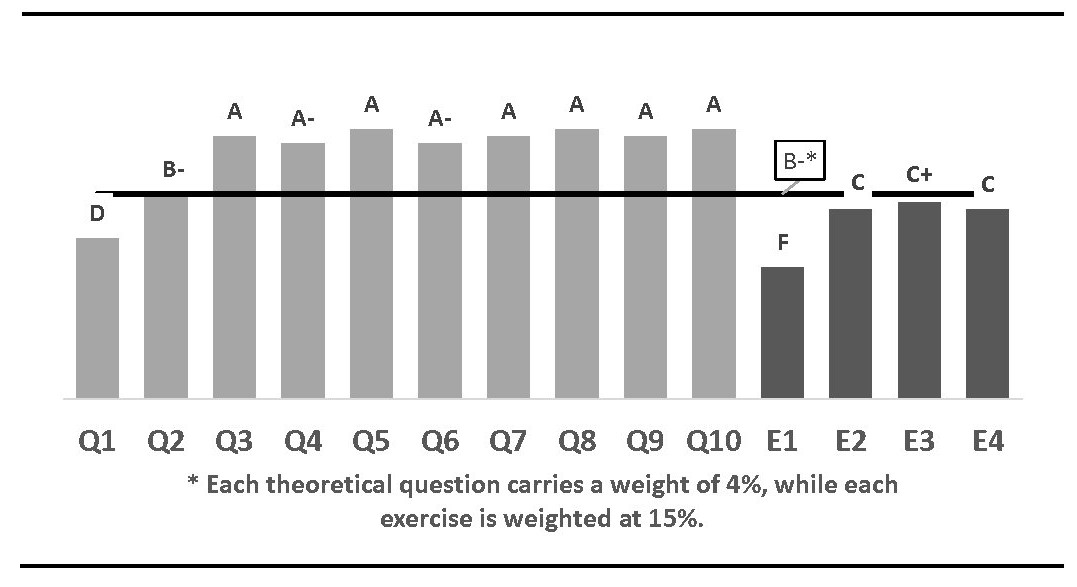

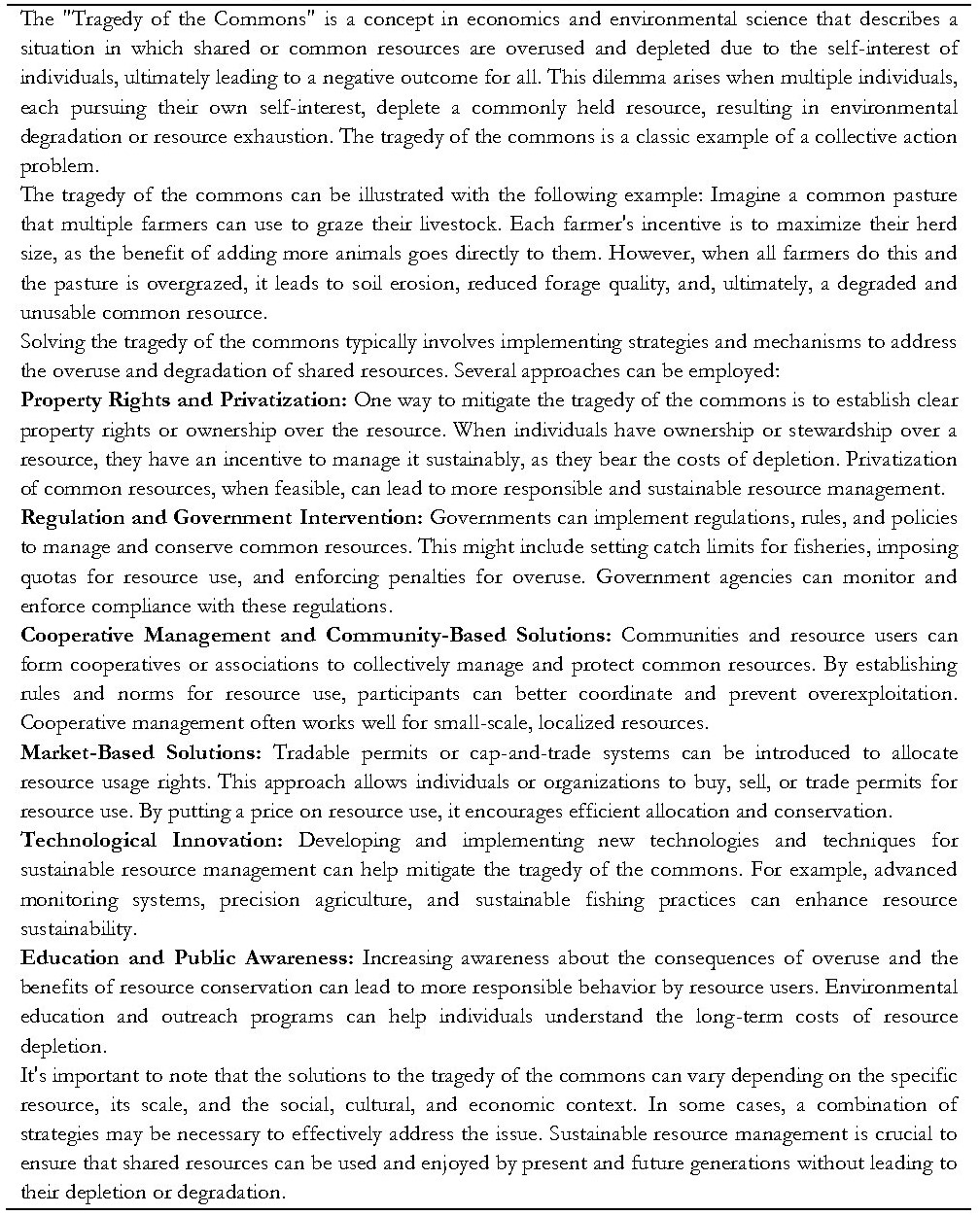

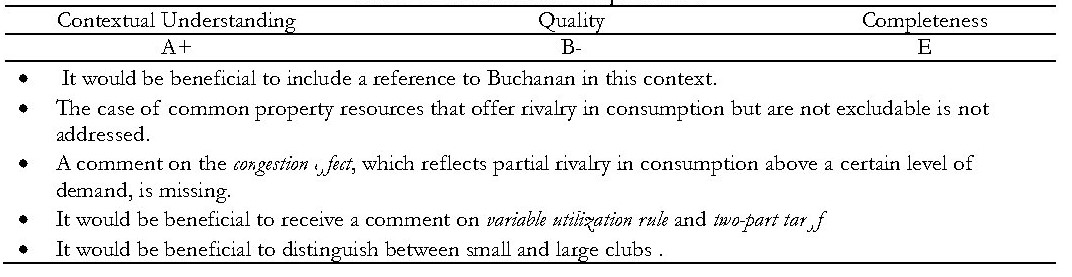

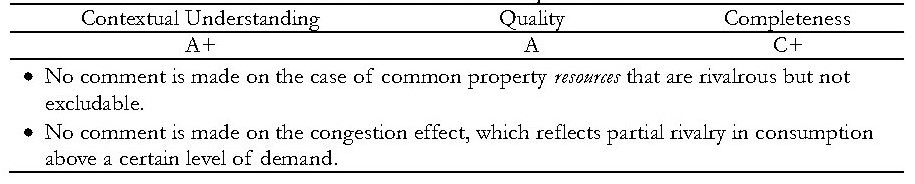

The answers provided were an exemplary fit to the theoretical questions in all instances. The quality of the responses was generally high, with scores ranging from A to A+, except for the first two questions. In the first question, on the explanatory theories of public expenditure growth, several hypotheses were omitted, including those related to the displacement effect, fiscal illusion, government agency, rent-seeking, budget-setting, and unbalanced growth. In the second question, on public goods and club goods, the answer should have considered common property resources, which are rival in consumption but from which users cannot be excluded. Furthermore, a comment on the congestion effect, which highlights the partial rivalry of consumption from a given level of demand, would have been appropriate. Commentary on the variable usage-based surcharge rule and the two-part tariff would also have been valuable. Finally, it would have been beneficial to differentiate between small and large clubs.

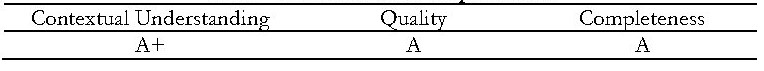

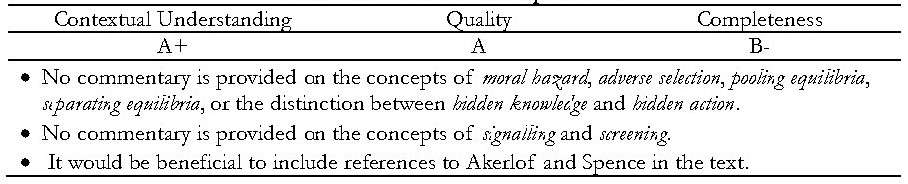

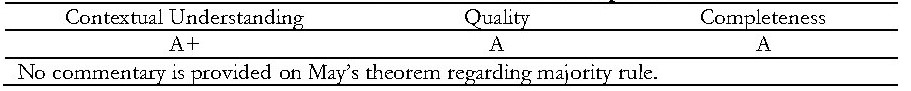

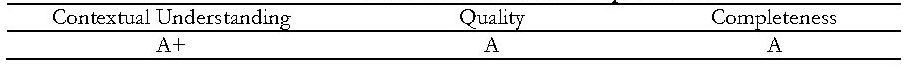

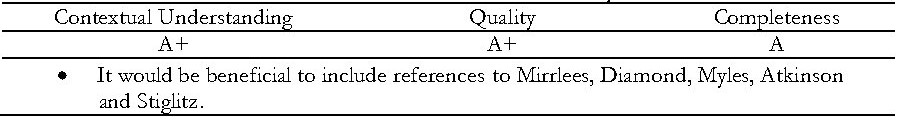

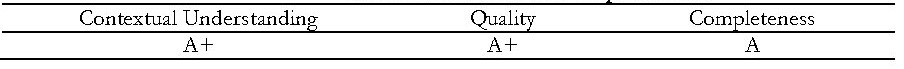

The explanations justifying the assessment are given in Tables B1 to B10 in Appendix B.

Figure 1 shows how the theoretical questions scored according to the chosen criteria.

Figure 1. Marks for theoretical questions

Source: own elaboration.

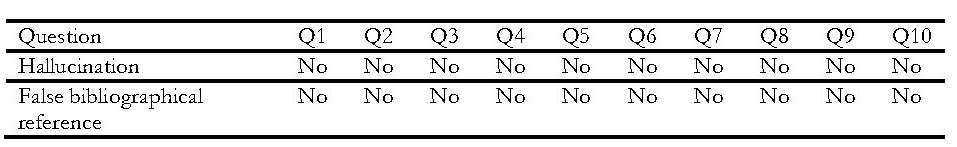

In addition to the examination mark, the extent to which answers contained hallucinations and/or false bibliographical references was also assessed. Note that the prompts deliberately did not require references to be given. The results were very encouraging in this respect. See Table 7.

Table 7. Was the response accompanied by hallucinatory content or false references?

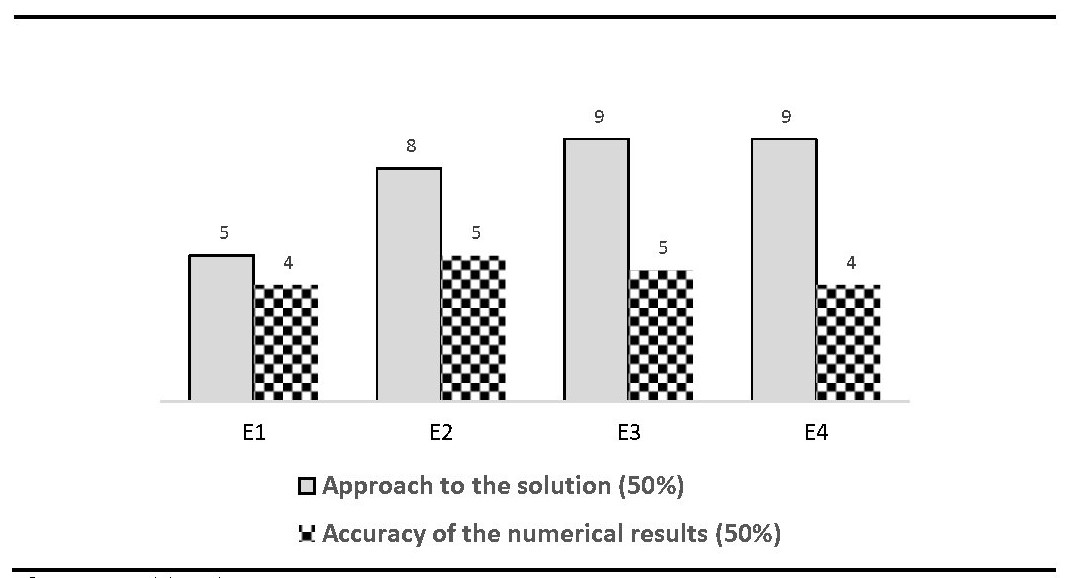

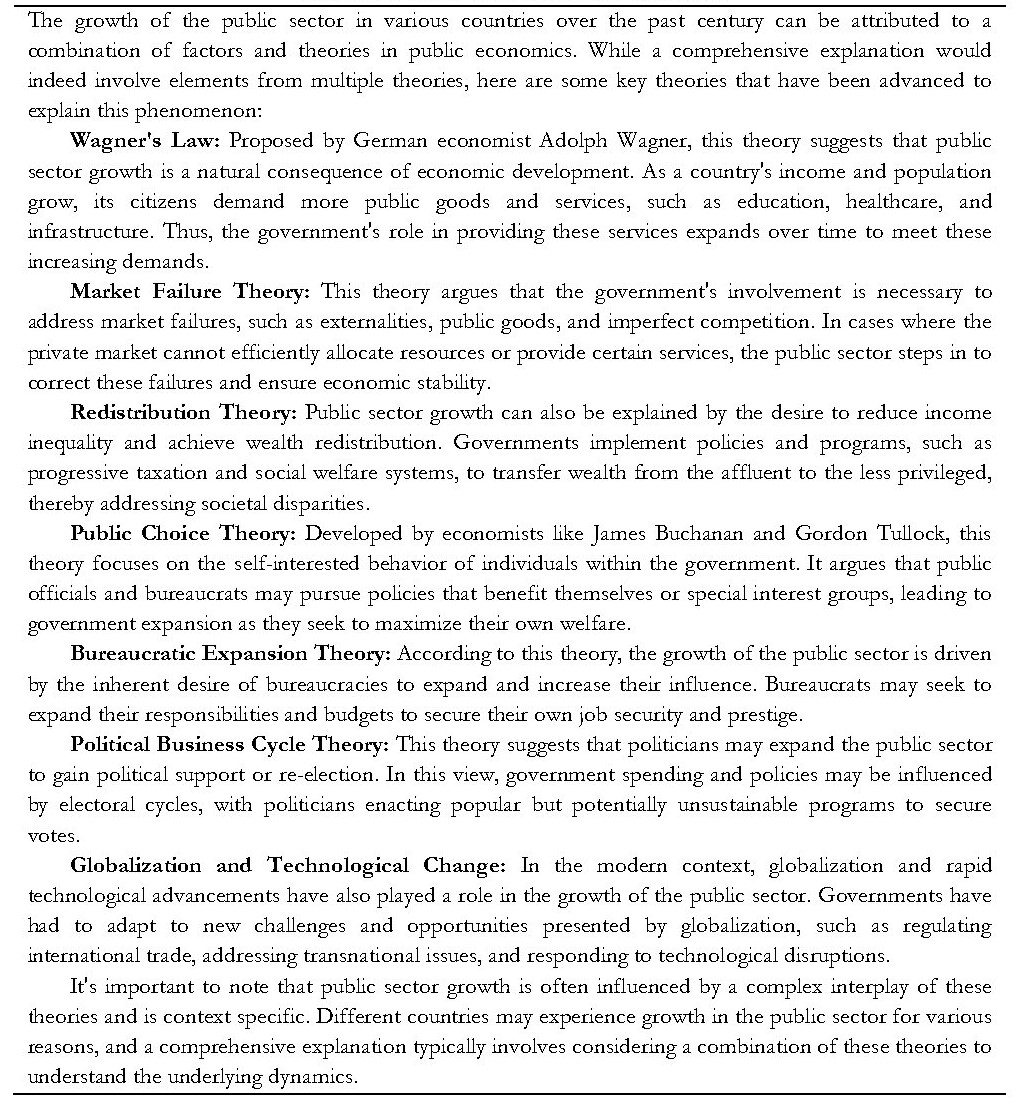

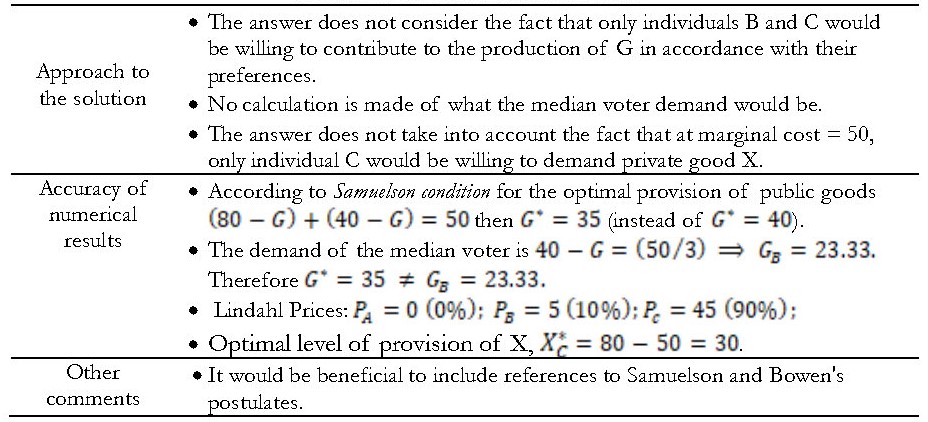

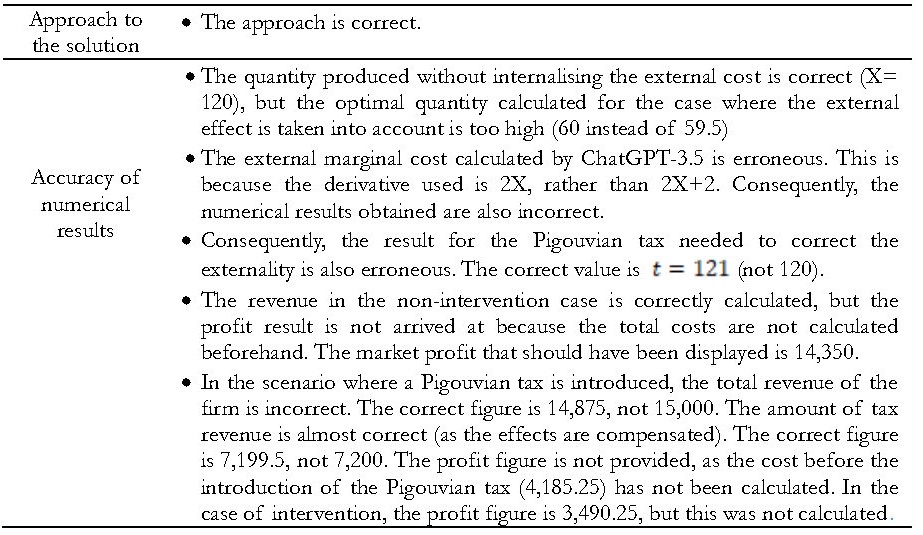

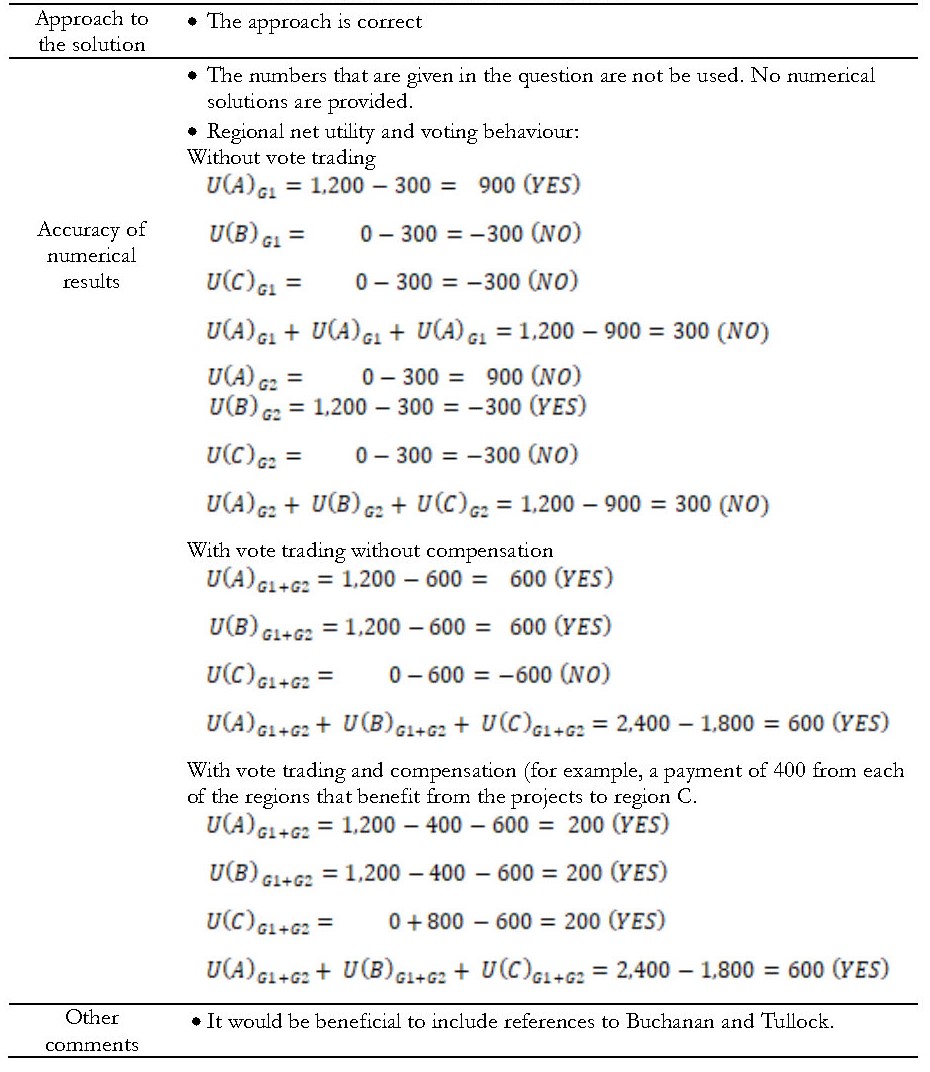

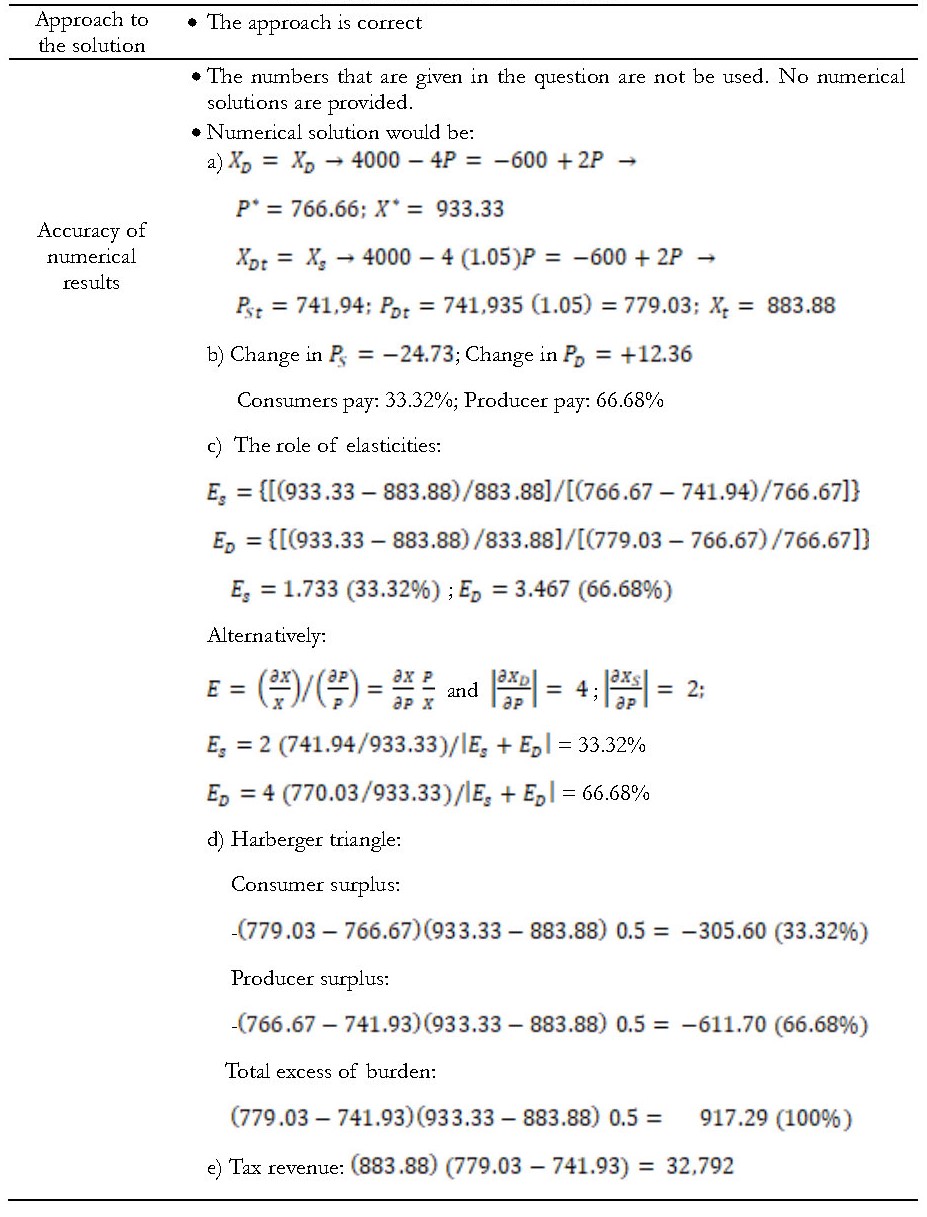

Regarding the Exercises, the distribution of marks was more heterogeneous. In general, the approach taken by ChatGPT-3.5 to address the Exercises was effective. However, in Exercise 1 on the optimal provision of public goods, the answer failed to take into account the fact that, according to the correctly revealed preferences, only two of the three individuals were willing to contribute to the production of the public good. Furthermore, the answer failed to consider the fact that, at the given marginal cost that determines the price of the private good, demand arises solely from one of the consumers. With regard to the numerical results, ChatGPT-3.5 provided an answer in two out of the four exercises (Exercises 1 and 2). In Exercise 1, some of the results were not entirely accurate due to the error in the approach outlined above. In Exercise 2 the results were also erroneous, due to an incorrect marginal cost derivative. Furthermore, not all the required results were obtained. In Exercises 3 and 4, for reasons that are unclear, ChatGPT-3.5 did not utilise the data provided to generate the numerical results. However, the approach employed was correct in both cases.
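The kind of issue identified in Exercise 1 can be illustrated with a short Python sketch. Efficient provision of a public good requires the sum of individual marginal willingness to pay to equal marginal cost (the Samuelson condition), and an individual whose marginal willingness to pay has fallen to zero drops out of that sum rather than entering it with a negative value. The linear schedules, the marginal cost and the function names below are hypothetical and are not the data of the exam; bisection is used here only as a convenient solver.

```python
def aggregate_mwtp(quantity, intercepts, slopes):
    """Vertical sum of linear marginal willingness to pay, truncated at zero."""
    return sum(max(0.0, a - b * quantity) for a, b in zip(intercepts, slopes))


def efficient_quantity(intercepts, slopes, marginal_cost, tol=1e-9):
    """Solve aggregate MWTP(G) = MC by bisection (the aggregate is non-increasing)."""
    if aggregate_mwtp(0.0, intercepts, slopes) <= marginal_cost:
        return 0.0  # nobody values the first unit enough to cover its cost
    low = 0.0
    high = max(a / b for a, b in zip(intercepts, slopes))  # aggregate MWTP is zero here
    while high - low > tol:
        mid = (low + high) / 2
        if aggregate_mwtp(mid, intercepts, slopes) > marginal_cost:
            low = mid
        else:
            high = mid
    return (low + high) / 2


# Hypothetical schedules MWTP_i(G) = a_i - b_i * G for three individuals.
intercepts = [10.0, 8.0, 2.0]
slopes = [1.0, 1.0, 1.0]
marginal_cost = 6.0

g_star = efficient_quantity(intercepts, slopes, marginal_cost)
# Zero-based indices of individuals with positive MWTP at the efficient quantity.
contributors = [i for i, (a, b) in enumerate(zip(intercepts, slopes)) if a - b * g_star > 0]
# Quantity obtained if the schedules are summed without truncation.
naive = (sum(intercepts) - marginal_cost) / sum(slopes)
print(g_star, contributors, naive)
```

With these illustrative numbers, the truncated sum gives an efficient quantity of 6, to which only the first two individuals contribute, whereas summing the untruncated schedules would give about 4.67.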

Figure 2. Marks for Exercises

Source: own elaboration.

The explanations justifying the assessment are given in Tables B11 to B14 in Appendix B.

The weighted result of the ChatGPT-3.5 responses, as shown in Figure 3, is a grade of B-.

Figure 3. Scoring of ChatGPT-3.5 responses
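To make the idea of a weighted result concrete, the following Python sketch averages letter grades within each part of an exam and then combines the parts using weights. It is only an illustration: the grade-point scale, the weights and the sample marks are hypothetical and are not those used in this study.

```python
# Hypothetical grade-point scale; the scale used in the paper may differ.
GRADE_POINTS = {
    "A+": 4.3, "A": 4.0, "A-": 3.7,
    "B+": 3.3, "B": 3.0, "B-": 2.7,
    "C+": 2.3, "C": 2.0, "C-": 1.7,
    "D": 1.0, "F": 0.0,
}


def mean_points(marks):
    """Average grade points of a list of letter marks."""
    return sum(GRADE_POINTS[m] for m in marks) / len(marks)


def weighted_grade(parts):
    """parts: list of (marks, weight) pairs. Returns (points, nearest letter)."""
    total_weight = sum(weight for _, weight in parts)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    points = sum(mean_points(marks) * weight for marks, weight in parts) / total_weight
    # Map the weighted average back to the closest letter on the scale.
    letter = min(GRADE_POINTS, key=lambda g: abs(GRADE_POINTS[g] - points))
    return points, letter


# Illustrative marks and equal weights for a theory part and an exercise part.
points, letter = weighted_grade([(["A", "A"], 0.5), (["C", "C"], 0.5)])
print(round(points, 2), letter)
```

Mapping the average back to the nearest letter is one of several possible conventions; a threshold-based conversion would be an equally reasonable choice.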

6. CONCLUSIONS

ChatGPT-3.5 displays a good level of competence in the field of Public Economics at the undergraduate level, to the extent that it is able to pass an exam with a grade of ‘B-’. The algorithm was able to provide accurate and contextually appropriate responses to all theoretical questions. In the context of solving exercises, the results were less encouraging. Although ChatGPT-3.5 demonstrated an ability to provide correct approaches, the numerical results showed varying levels of quality. The algorithm demonstrated a consistent ability to interpret the questions posed and correctly apply the mathematical formulae. Nevertheless, in two exercises, the algorithm failed to utilise the provided data to generate results.

It has been argued that one of the current disadvantages of utilising this AI tool is that it lacks the capacity to comprehend context, read tone and emotions, address complex topics, or create personalised lesson plans. It has also been pointed out that LLMs exhibit limitations in their ability to perform tasks requiring complex, multi-step reasoning.[45] In this context, it is accurate to state that the questions included in the examination were of a straightforward nature. It is possible that this is the reason for the satisfactory outcome.

The utilisation of generative AI can be a valuable asset in the automation of minor tasks, commonly referred to as ‘micro-tasks’, that lecturers and teaching assistants have to perform in the preparation of their lectures and classes. Based on the ability of ChatGPT-3.5 to deal with basic questions of Public Economics, it could be argued that the integration of generative AI into teachers' operational procedures can significantly improve their productivity.

In a similar vein, the level of Public Economics demonstrated by ChatGPT-3.5 at the undergraduate level allows us to conclude that teachers can use this tool to gamify the classroom and propose games that can be solved individually or in teams. This strategy has the potential to foster students' engagement with the subject matter.

One aspect of the research into the use of AI tools is the extent to which they can assist teachers in implementing more personalised education, allowing students to learn at their own pace. The findings of this study indicate that the utilisation of ChatGPT-3.5 capabilities in the domain of Public Finance enables the expedient development of instructional materials that facilitate a more personalised educational experience.

It is the responsibility of contemporary educators to prepare students for the novel work environment that is characterised by the proliferation of artificial intelligence use. In the specific case of Economics education, the consideration of current events that can be analysed using ChatGPT-3.5 can assist students in developing a better understanding of the relationship between theoretical concepts and the real-world context of the global economy.

The utility of AI-based assistants in providing responses to exercises and essays in the field of Public Economics (as in many other scientific disciplines) will continue to expand in the future. This will permit educators to concentrate on tasks where they exhibit comparative advantage when preparing their materials (such as questioning, evaluating and reviewing the content generated by these algorithms). In this way, they will have more time to support students in more complex areas of the subject.

The development of students' comprehensive intellectual abilities, including critical thinking, fluent writing, sound logical reasoning, precise linguistic expression and agile thinking, has consistently been regarded as the fundamental mission of education. The advent of AI-based tools in our daily lives does not negate this mission; rather, it compels educators to reinforce their students' critical thinking abilities and to cultivate their creativity as a means of differentiating themselves from robots.

Notes

[1] See Becker et al. (2018).

[2] See Nguyen et al. (2023).

[3] See Korinek (2023).

[4] See Leswing (2023).

[5] See Gilson et al. (2023).

[6] See Choi et al. (2022) and Terwiesch (2023).

[7] See West (2023).

[8] See Geerling et al. (2023) or Trent (2023). OpenAI (2023) reports on the evaluation of a number of tests originally designed for humans and solved by GPT-4, including tests in Microeconomics and Macroeconomics.

[9] See Ungerer and Slade (2022).

[10] See Nguyen et al. (2023).

[11] See Arnett (2016) and Selwyn (2019).

[12] See Pandey and Gelin (2017).

[13] See Murphy (2019).

[14] See Aguiar et al. (2015), Lakkaraju et al. (2015), Luckin and Holmes (2016), Murphy (2019), and The Institute for Ethical AI in Education (2020).

[15] See Du Boulay (1998).

[16] See The Institute for Ethical AI in Education (2020), Klašnja-Milićević and Ivanović (2021) or Tapalova et al. (2022).

[17] See Foltz et al. (2013), Murphy (2019), Swauger (2020) and The Institute for Ethical AI in Education (2020).

[18] See The Institute for Ethical AI in Education (2020).

[19] See Goksel and Bozkurt (2019).

[20] See Popenici and Kerr (2017).

[21] See Bayne (2015), Knox (2016), and Selwyn (2019).

[22] See Holmes (2023).

[23] See Conati (2009).

[24] See Holmes (2023).

[25] See Braunack-Mayer et al. (2020).

[26] See Porayska-Pomsta and Holmes (2022).

[27] See Marković et al. (2019) and Braunack-Mayer et al. (2020).

[28] See Marković et al. (2019), Holmes et al. (2021) or Ungerer and Slade (2022).

[29] See Nichols and Holmes (2018), Knox et al. (2019b), Vincent-Lancrin and Van der Vlies (2020), Holstein and Doroudi (2021), Ungerer and Slade (2022), or Hong et al. (2022).

[30] See Porayska-Pomsta and Holmes (2022) or Nguyen et al. (2023).

[31] See Porayska-Pomsta and Holmes (2022).

[32] See Mohammed and Nell’Watson (2019) and Madaio et al. (2022).

[33] See Arnett (2016).

[34] Other GAI systems are the following: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM); Transformers, Bayesian Networks, Conditional Language Models.

[35] See Aydın and Karaarslan (2023).

[36] See Holmes (2023).

[37] See Baidoo-Anu and Owusu-Ansah (2023).

[38] See McMurtrie (2022).

[39] See Farazouli et al. (2024) and Dawson et al. (2024).

[40] See Pickell and Doak (2023).

[41] See Cowen and Tabarrok (2023).

[42] See Zhai (2022) and Adıgüzel et al. (2023).

[43] See Iqbal et al. (2022).

[44] Note that ChatGPT-3.5 does not incorporate the latest developments and references as it was trained on data only up until September 2021.

[45] See Dziri et al. (2023).

References

Adıgüzel, T., Kaya, M. H., and Cansu, F. K. (2023) “Revolutionizing education with AI: Exploring the transformative potential of ChatGPT,” Contemporary Educational Technology.

Aguiar, E., Lakkaraju, H., Bhanpuri, N., Miller, D., Yuhas, B., and Addison, K. L. (2015). “Who, When, and Why: A Machine Learning Approach to Prioritizing Students at Risk of Not Graduating High School on Time,” in Association for Computing Machinery, LAK ’15, Proceedings of the Fifth International Conference on Learning Analytics and Knowledge, Poughkeepsie, N.Y.: 93–102.

Akgun, S. and Greenhow, C. (2022). “Artificial intelligence in education: Addressing ethical challenges in K-12 settings,” AI Ethics, 2: 431–440.

Aoun, J. E. (2017): Robot-proof: Higher education in the age of artificial intelligence. MIT Press.

Arnett, T. (2016) Teaching in the Machine Age: How innovation can make bad teachers good and good teachers better. Christensen Institute.

Atkinson, A. B. and Stiglitz, J. E. (2015). Lectures on public economics: Updated edition. Princeton University Press.

Auerbach, A. J., Chetty, R., Feldstein, M., and Saez, E. (Eds.). (2013). Handbook of Public Economics. Newnes.

Aydın, Ö. and Karaarslan, E. (2023). “Is ChatGPT leading generative AI? What is beyond expectations?” Academic Platform Journal of Engineering and Smart Systems.

Baidoo-Anu, D. and Owusu-Ansah, L. (2023). “Education in the Era of Generative Artificial Intelligence (AI): Understanding the Potential Benefits of ChatGPT in Promoting Teaching and Learning,” Journal of AI, 7 (1): 52-62.

Baker, R. S. (2016). “Stupid tutoring systems, intelligent humans,” International Journal of Artificial Intelligence in Education, 26: 600-614.

Bearman, M., Ryan, J., and Ajjawi, R. (2023). “Discourses of artificial intelligence in higher education: A critical literature review,” Higher Education, 86(2): 369-385.

Becker, S. A., Brown, M., Dahlstrom, E., Davis, A., DePaul, K., Diaz, V., and Pomerantz, J. (2018). NMC Horizon Report: 2018 Higher Education Edition. Educause.

Braunack-Mayer, A. J., Street, J. M., Tooher, R., Feng, X., and Scharling-Gamba, K. (2020): “Student and staff perspectives on the use of big data in the tertiary education sector: A scoping review and reflection on the ethical issues,” Review of Educational Research, 90 (6): 788-823.

Choi, J. H., Hickman, K. E., Monahan, A., and Schwarcz, D. (2022) “ChatGPT Goes to Law School,” Journal of Legal Education 387. Available at SSRN: https://ssrn.com/abstract=4335905 or http://dx.doi.org/10.2139/ssrn.4335905.

Cowen, T. and Tabarrok, A. T. (2023) “How to Learn and Teach Economics with Large Language Models, Including GPT,” GMU Working Paper in Economics 23-18.

Dawson, P., Nicola-Richmond, K. and Partridge, H. (2024) “Beyond open book versus closed book: A taxonomy of restrictions in online examinations,” Assessment & Evaluation in Higher Education, 49(2): 262-274.

Du Boulay, B. (1998). “What does the ‘AI’ in AIED buy?” IEE Colloquium on Artificial Intelligence in Educational Software. IET Digital Library.

Dziri, N., Lu, X., Sclar, M., Li, X. L., Jiang, L., Lin, B. Y., ... and Choi, Y. (2024) “Faith and fate: Limits of transformers on compositionality,” Advances in Neural Information Processing Systems, 36.

Farazouli, A., Cerratto-Pargman, T., Bolander-Laksov, K., and McGrath, C. (2024) “Hello GPT! Goodbye home examination? An exploratory study of AI chatbots impact on university teachers’ assessment practices,” Assessment & Evaluation in Higher Education, 49(3): 363-375.

Foltz, P. W., Streeter, L. A., Lochbaum, K. E., and Landauer, T. K. (2013): “Implementation and applications of the intelligent essay assessor” in M. D. Shermis & J. Burstein (Eds.), Handbook of automated essay evaluation: 68–88. Routledge.

Geerling, W., Mateer, G. D., Wooten, J., and Damodaran, N. (2023) “ChatGPT has Aced the Test of Understanding in College Economics: Now What?” The American Economist, 68 (2): 233-245. https://doi.org/10.1177/05694345231169654.

Gilson, A., Safranek, C. W., Huang, T., Socrates, V., Chi, L., Taylor, R. A., and Chartash, D. (2023) “How does ChatGPT perform on the United States medical licensing examination? The implications of large language models for medical education and knowledge assessment,” JMIR Medical Education, 9(1), e45312. https://doi.org/10.2196/45312.

Goksel, N. and Bozkurt, A. (2019). “Artificial intelligence in education: Current insights and future perspectives,” in Handbook of Research on Learning in the Age of Transhumanism: 224–236. IGI Global.

Gruber, J. (2005). Public finance and public policy. Macmillan.

Hindriks, J., and Myles, G. D. (2013). Intermediate public economics. MIT Press.

Holmes, W. (2023). “AIED—Coming of Age?” International Journal of Artificial Intelligence in Education: 1-11.

Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S. B., ... and Koedinger, K. R. (2021). “Ethics of AI in education: Towards a community-wide framework,” International Journal of Artificial Intelligence in Education: 1-23.

Holstein, K., and Doroudi, S. (2021). “Equity and Artificial Intelligence in Education: Will “AIEd” Amplify or Alleviate Inequities in Education?” arXiv preprint.

Hong, Y., Nguyen, A., Dang, B., and Nguyen, B. P. T. (2022). “Data Ethics Framework for Artificial Intelligence in Education (AIED),” in International Conference on Advanced Learning Technologies (ICALT): 297-301. IEEE.

Hyman, D. N. (2005). Public finance: A contemporary application of theory to policy. Thomson/South Western.

Hrastinski, S., Olofsson, A.D., Arkenback, C. et al. (2019). “Critical Imaginaries and Reflections on Artificial Intelligence and Robots in Postdigital K-12 Education,” Postdigital Science and Education 1: 427–445.

Iqbal, N., Ahmed, H., and Azhar, K. A. (2022) “Exploring teachers' attitudes towards using ChatGPT”. Glob. J. Manag. Adm. Sci. 3, 97–111. doi: 10.46568/gjmas.v3i4.163.

Kennedy, M. M. J. (2012). Public finance. PHI Learning Pvt. Ltd.

Klašnja-Milićević, A. and Ivanović, M. (2021). “E-Learning personalization systems and sustainable education,” Sustainability, 13 (12): 6713.

Knox, J. (2016). “Posthumanism and the MOOC: opening the subject of digital education,” Studies in Philosophy and Education, 35: 305-320.

Knox, J., Wang, Y., and Gallagher, M. (2019a). “Introduction: AI, inclusion, and ‘everyone learning everything’,” Artificial intelligence and inclusive education: Speculative futures and emerging practices: 1-13.

Knox, J., Yu, W., and Gallagher, M. (2019b). Artificial intelligence and inclusive education. Springer Singapore.

Korinek, A. (2023) “Generative AI for economic research: Use cases and implications for economists,” Journal of Economic Literature, 61(4): 1281-1317.

Lakkaraju, H., Aguiar, E., Shan, C., Miller, D., Bhanpuri, N., Ghani, R., and Addison, K. L. (2015). “A machine learning framework to identify students at risk of adverse academic outcomes,” in Proceedings of the 21th ACM SIGKDD international conference on knowledge discovery and data mining: 1909-1918.

Leswing, K. (2023, March 26). “OpenAI announces GPT-4, claims it can beat 90% of humans on the SAT,” CNBC. https://www.cnbc.com/2023/03/14/openai-announces-gpt-4-says-beats-90percent-of-humans-on-sat.htm.

Lin, P. H., Wooders, A., Wang, J. T. Y., and Yuan, W. M. (2018). “Artificial intelligence, the missing piece of online education?” IEEE Engineering Management Review, 46 (3): 25–38.

Luckin, R. and Holmes, W. (2016): Intelligence unleashed: An argument for AI in education. UCL Knowledge Lab (University College London) and Pearson.

McMurtrie, B. (2023, February 8) “AI and the future of undergraduate writing,” The Chronicle of Higher Education. https://www.chronicle.com/article/ai-and-the-future-of-undergraduate-writing.

Madaio, M., Blodgett, S. L., Mayfield, E., and Dixon-Román, E. (2022). “Beyond ‘fairness’: Structural (in)justice lenses on AI for education,” in The ethics of artificial intelligence in education: 203-239. Routledge.

Marković, M. G., Debeljak, S., and Kadoić, N. (2019). “Preparing students for the era of the General Data Protection Regulation (GDPR),” TEM Journal: Technology, Education, Management, Informatics, 8: 150–156.

Mohammed, P. S. and ‘Nell’Watson, E. (2019). “Towards inclusive education in the age of artificial intelligence: Perspectives, challenges, and opportunities” in Artificial Intelligence and Inclusive Education: Speculative futures and emerging practices: 17-37.

Mollick, E. R. and Mollick, L. (2023) “Using AI to Implement Effective Teaching Strategies in Classrooms: Five Strategies, Including Prompts,” https://dx.doi.org/10.2139/ssrn.4391243.

Murphy, R. F. (2019). “Artificial intelligence applications to support k–12 teachers and teaching: a review of promising applications, challenges, and risks,” Perspective: 1–20.

Myles, G. D. (2005). Public Economics. Cambridge and New York: Cambridge University Press. Persson, Mats, and Agnar Sandmo.

Nguyen, A., Ngo, H. N., Hong, Y., Dang, B., and Nguyen, B. P. T. (2023). “Ethical principles for artificial intelligence in education,” Education and Information Technologies, 28 (4): 4221-4241.

Nichols, M. and Holmes, W. (2018). “Don't do Evil: Implementing Artificial Intelligence in Universities” in EDEN Conference Proceedings, 2: 110-118.

OpenAI (2023) “GPT-4 Technical Report,” https://arxiv.org/pdf/2303.08774.

Pickell, T. R. and Doak, B. R. (2023). Five Ideas for How Professors Can Deal with GPT-3... For Now.

Popenici, S. A., and Kerr, S. (2017). “Exploring the impact of artificial intelligence on teaching and learning in higher education,” Research and Practice in Technology Enhanced Learning, 12(1): 1-13.

Porayska-Pomsta, K., and Holmes, W. (2022). “Toward ethical AIED,” arXiv preprint.

Rosen, H. S., and Gayer, T. (1995). Public Finance. Richard D. Irwin Inc.

Selwyn, N. (2019): Should robots replace teachers? AI and the future of education. John Wiley & Sons.

Sharma, S. and Yadav, R. (2022) “Chat GPT–A technological remedy or challenge for education system”, Global Journal of Enterprise Information System, 14(4): 46-51.

Stiglitz, J. E., and Rosengard, J. K. (2015). Economics of the public sector: Fourth international student edition. WW Norton & Company.

Swauger, S. (2020). “Our bodies encoded: Algorithmic test proctoring in higher education” in J. Stommel, C. Friend, & S. M. Morris (Eds.), Critical Digital Pedagogy. Pressbooks.

Tapalova, O., Zhiyenbayeva, N., and Gura, D. (2022). “Artificial Intelligence in Education: AIEd for Personalised Learning Pathways,” The Electronic Journal of e-Learning, 20 (5): 639-653.

Terwiesch, C. (2023) “Would Chat GPT3 Get a Wharton MBA?”, Pennsylvania: Mack Institute for Innovation Management at the Wharton School, University of Pennsylvania.

The Institute for Ethical AI in Education (2020). Interim report: towards a shared vision of ethical AI in education. The University of Buckingham.

Trent, C. (2023) “ChatGPT and Current Events in the Economics Classroom,” Business Education Innovation Journal, 15(1).

Ungerer, L., and Slade, S. (2022). “Ethical considerations of artificial intelligence in learning analytics in distance education contexts” in Learning Analytics in Open and Distributed Learning: Potential and Challenges: 105-120. Singapore: Springer Nature Singapore.

Vincent-Lancrin, S., and Van der Vlies, R. (2020). Trustworthy artificial intelligence (AI) in education: Promises and challenges. OECD Library.

West, C. G. (2023) “AI and the FCI: Can ChatGPT project an understanding of introductory Physics?,” arXiv preprint arXiv:2303.01067.

Zhai, X. (2022) “ChatGPT user experience: Implications for education”. Available at SSRN 4312418.

APPENDIX A

Table A1: ChatGPT-3.5 answer to Question 1

Table A2: ChatGPT-3.5 answer to Question 2

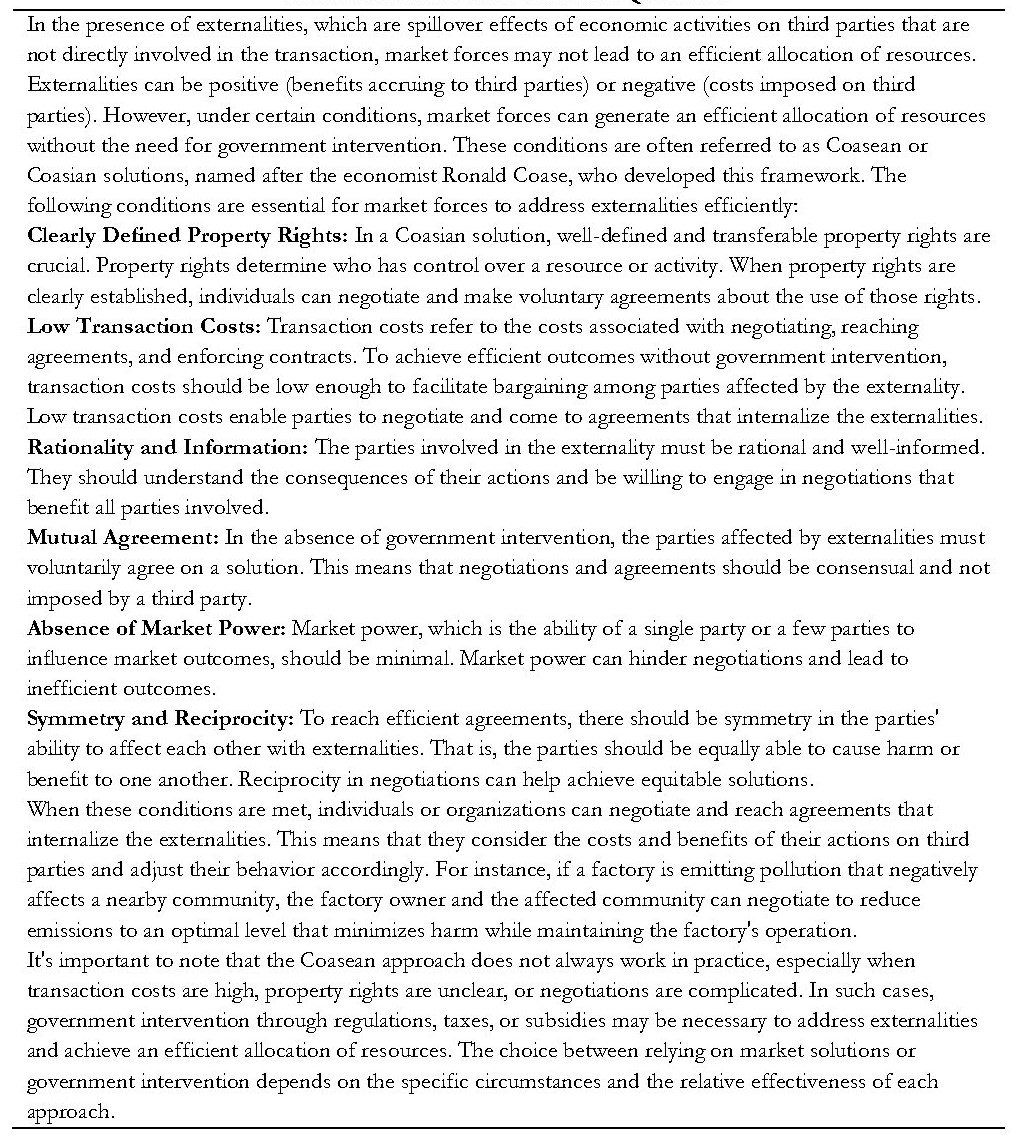

Table A3: ChatGPT-3.5 answer to Question 3

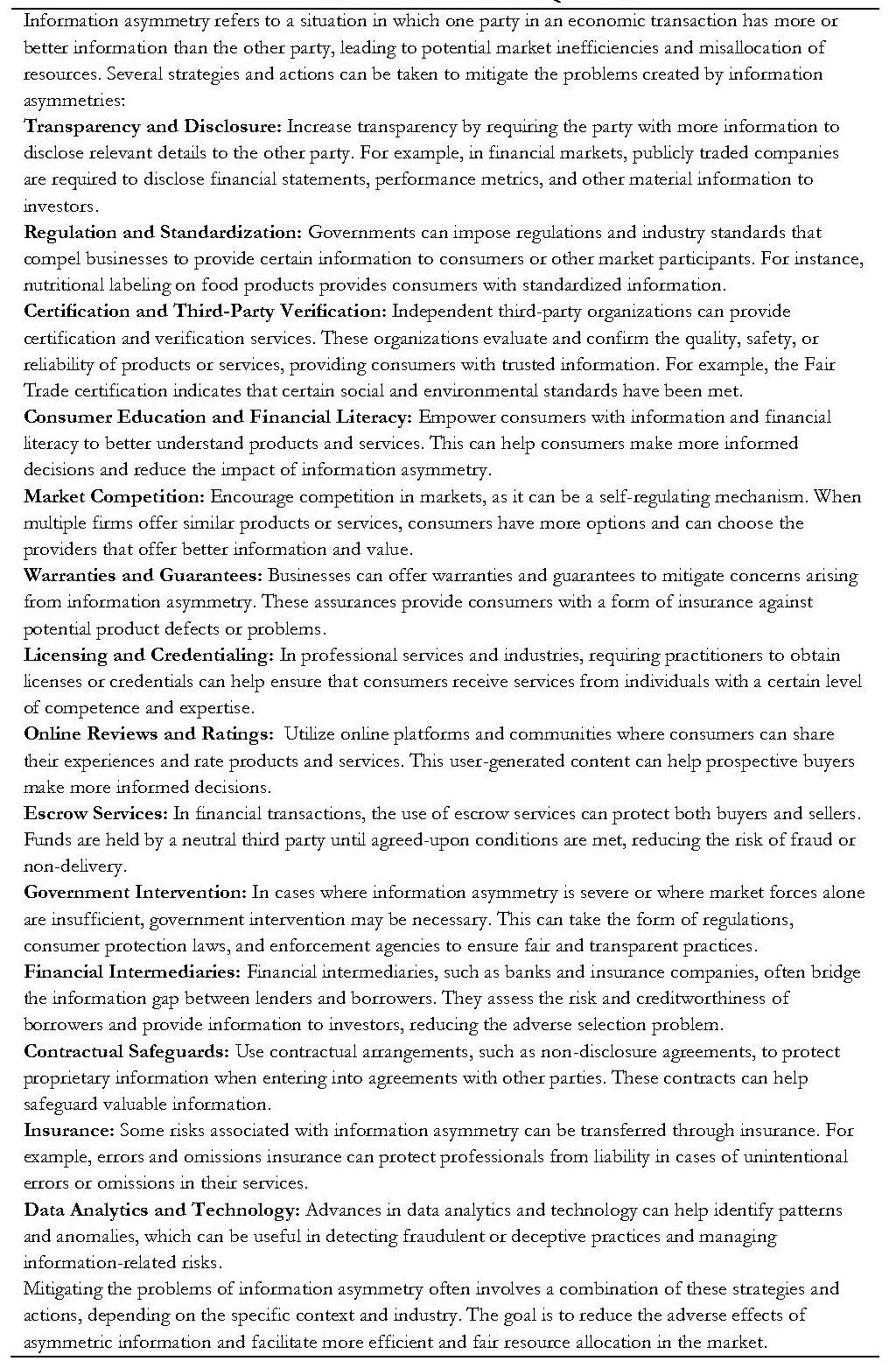

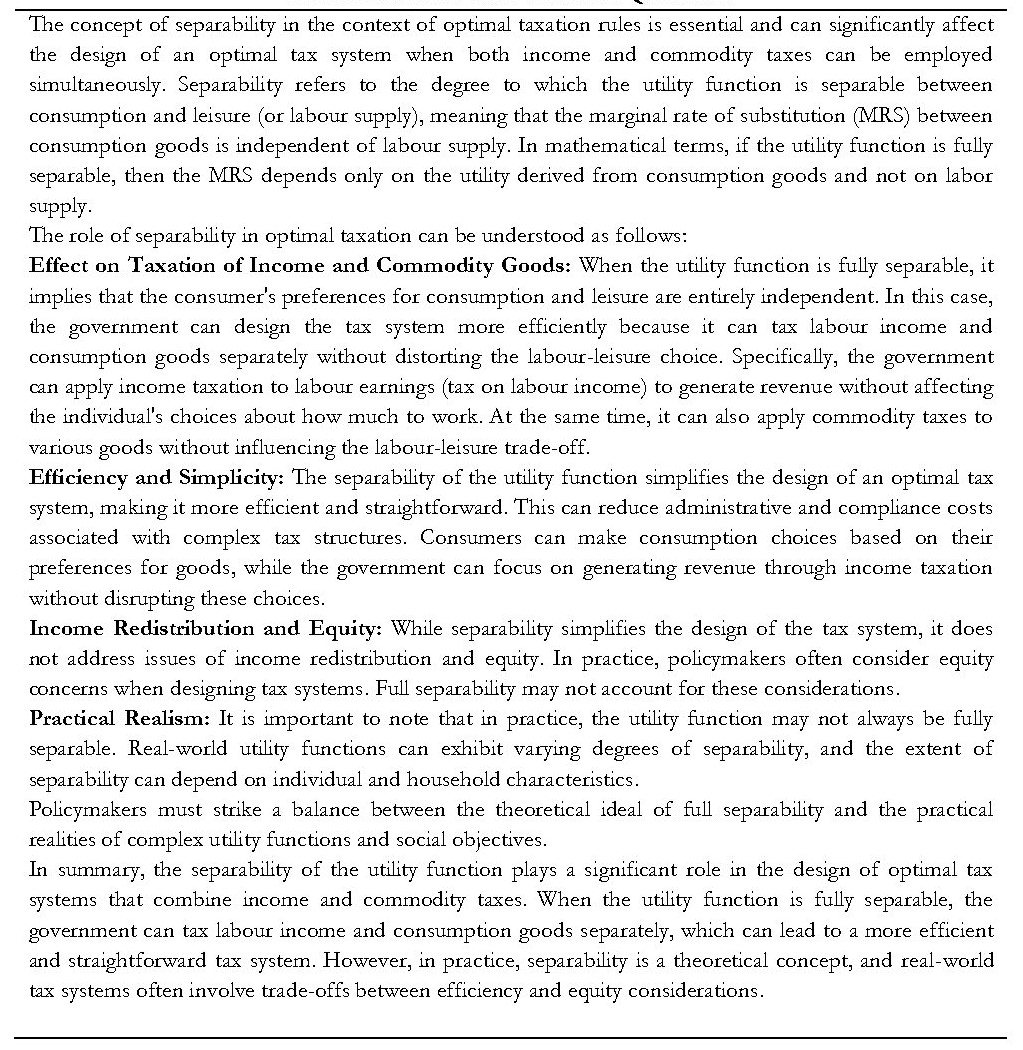

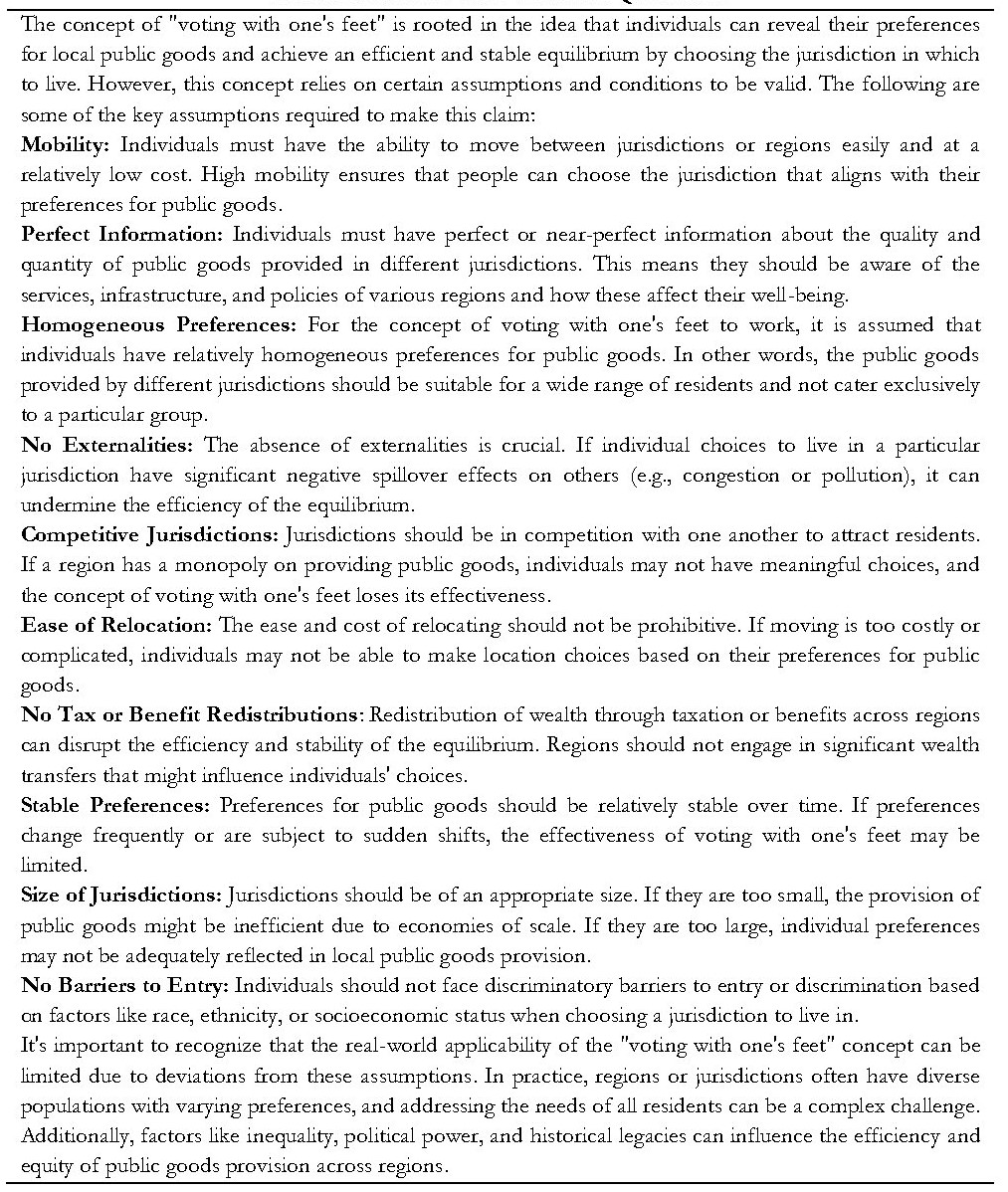

Table A4: ChatGPT-3.5 answer to Question 4

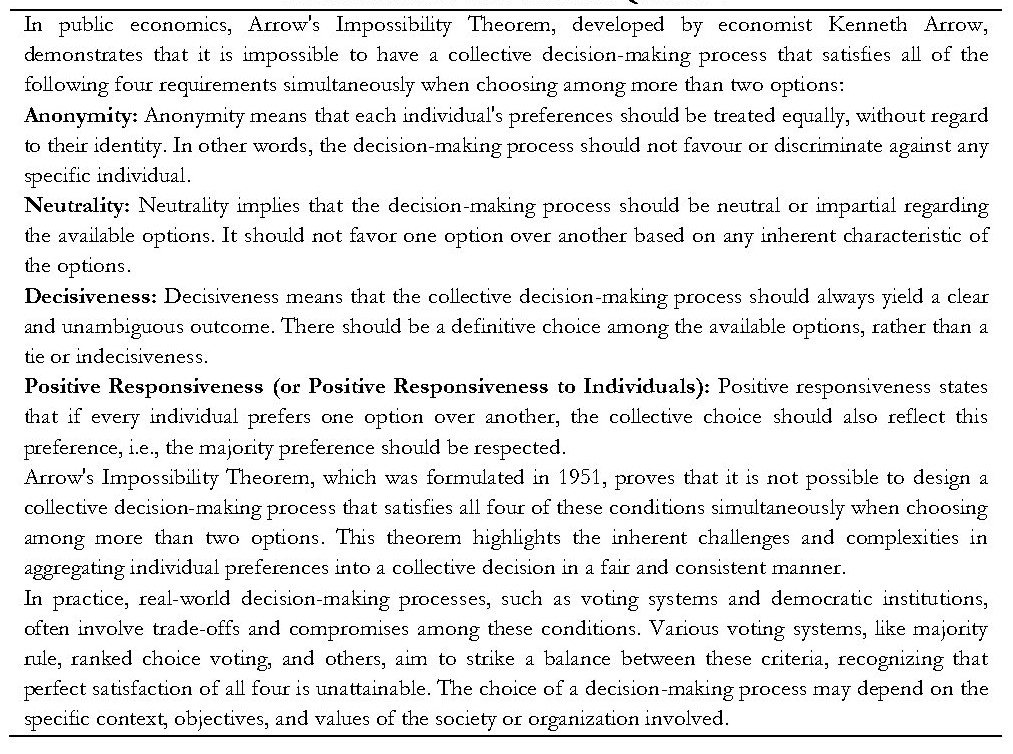

Table A5: ChatGPT-3.5 answer to Question 5

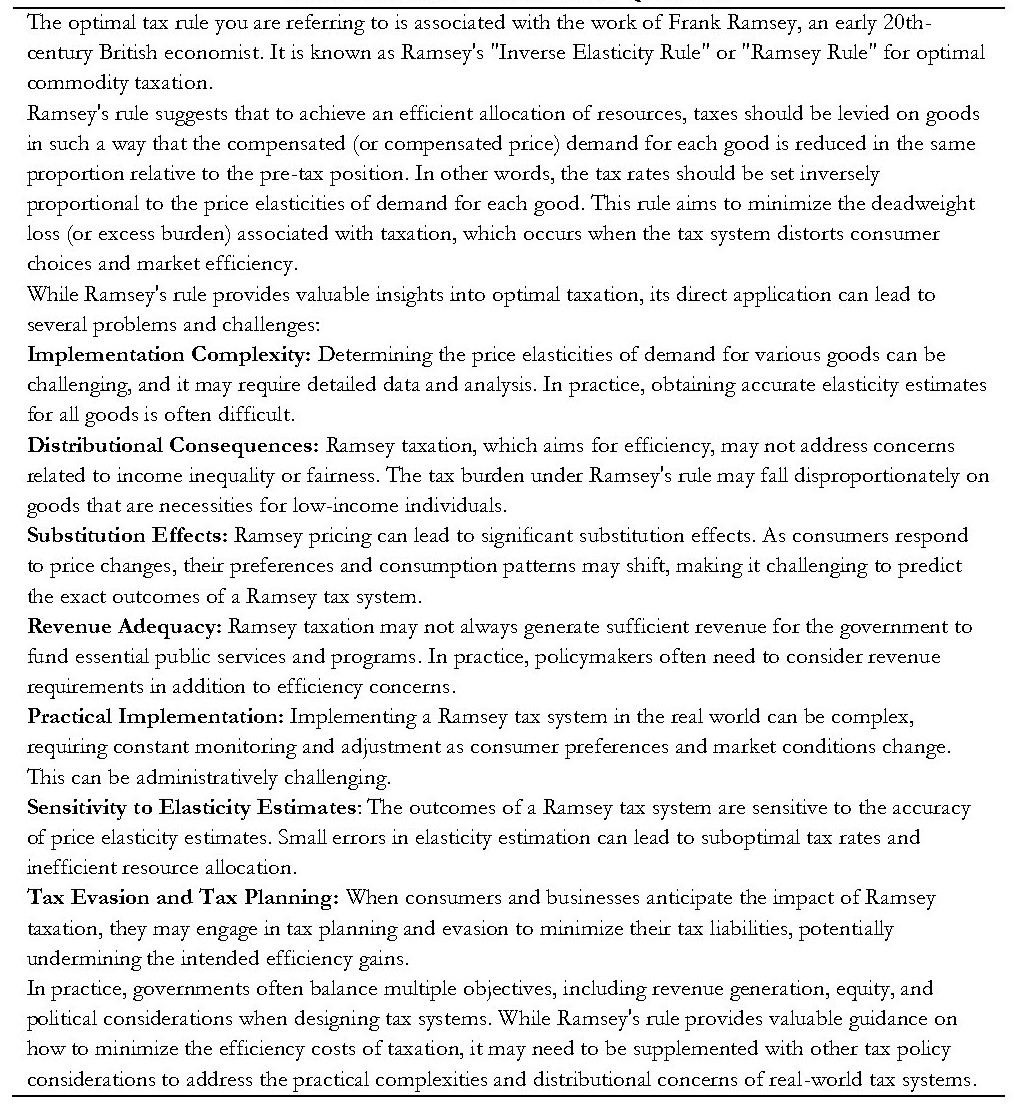

Table A6: ChatGPT-3.5 answer to Question 6

Table A7: ChatGPT-3.5 answer to Question 7

Table A8: ChatGPT-3.5 answer to Question 8

Table A9: ChatGPT-3.5 answer to Question 9

Table A10: ChatGPT-3.5 answer to Question 10

Table A11: ChatGPT-3.5 answer to Exercise 1

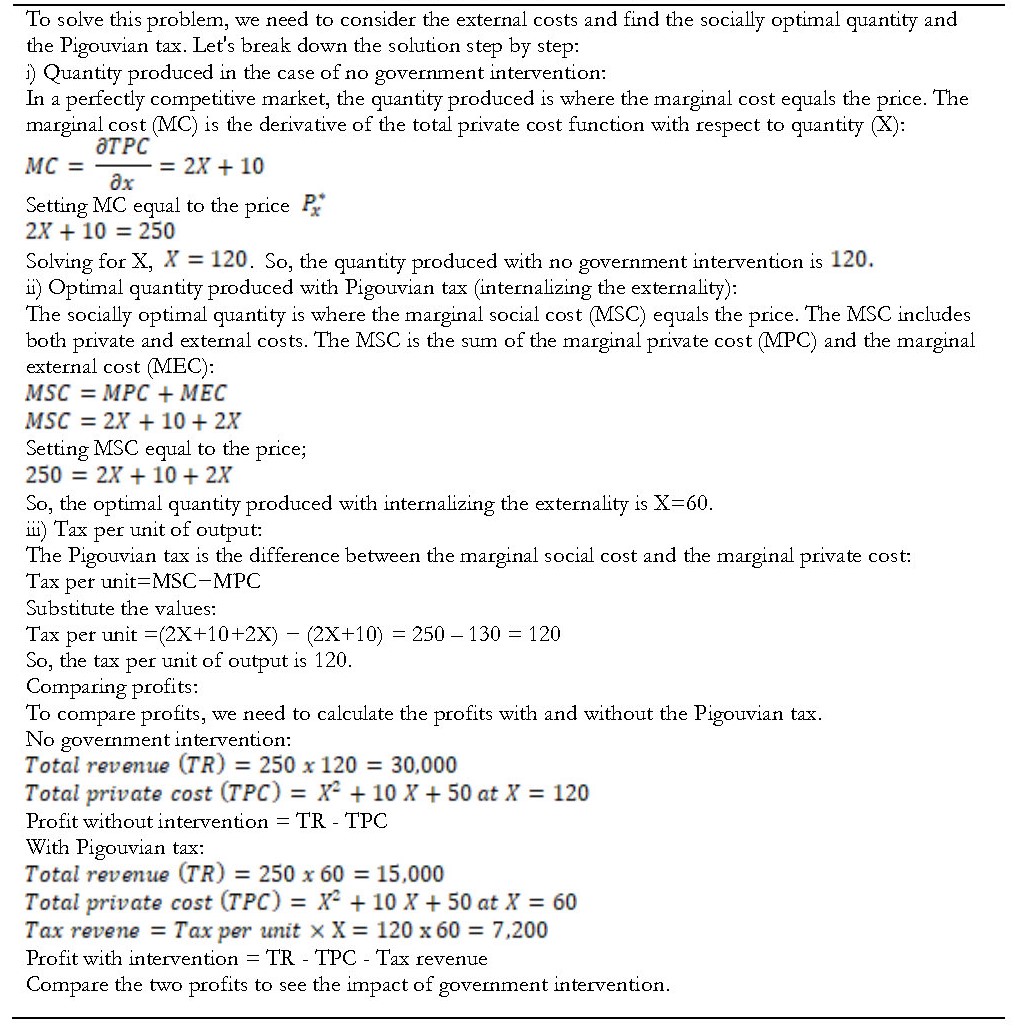

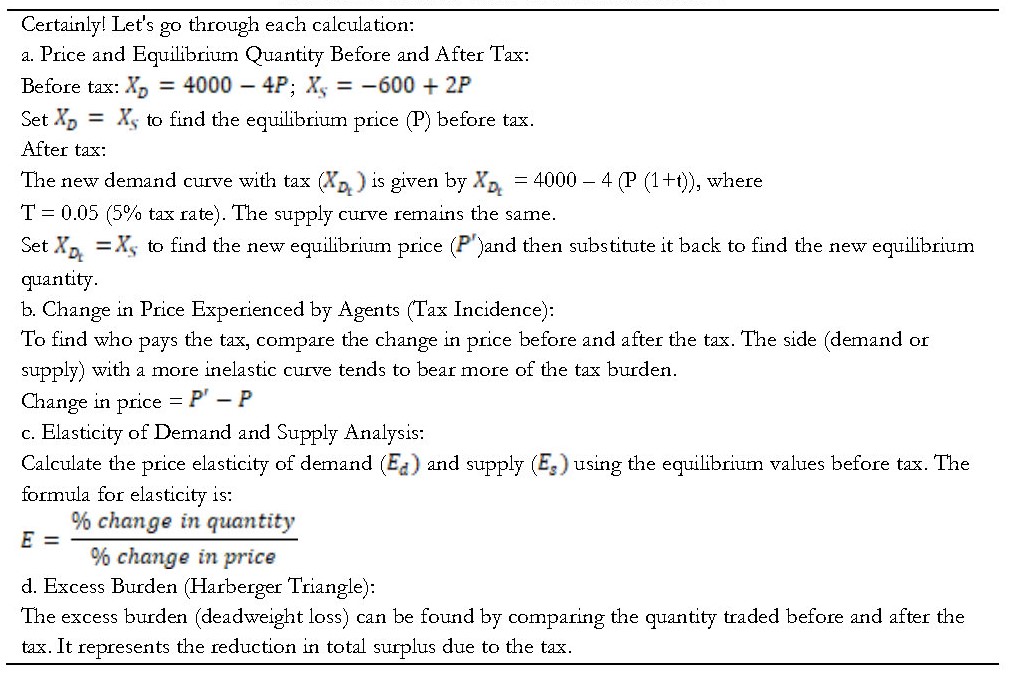

Table A12: ChatGPT-3.5 answer to Exercise 2

Table A13: ChatGPT-3.5 answer to Exercise 3

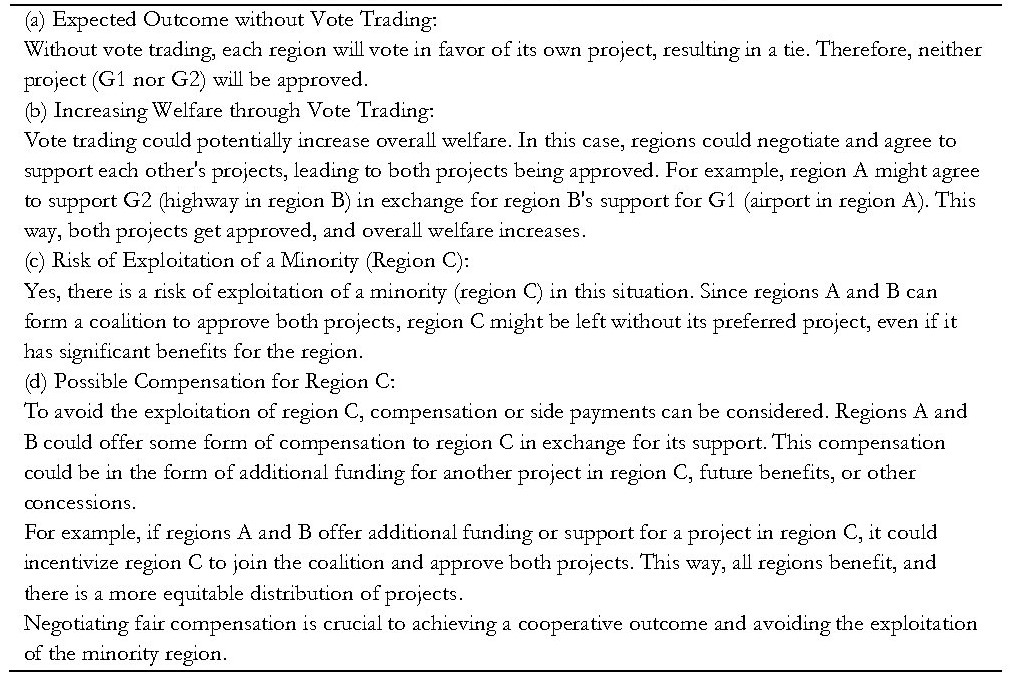

Table A14: ChatGPT-3.5 answer to Exercise 4

APPENDIX B

The explanations justifying the assessment are given in Tables B1 to B14.

Table B1: Assessment of question 1

Table B2: Assessment of question 2

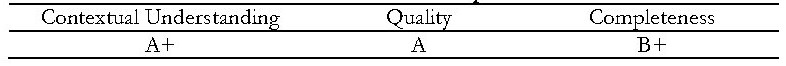

Table B3: Assessment of question 3

Table B4: Assessment of question 4

Table B5: Assessment of question 5

Table B6: Assessment of question 6

Table B7: Assessment of question 7

Table B8: Assessment of question 8

Table B9: Assessment of question 9

Table B10: Assessment of question 10

Table B11: Assessment of Exercise 1

Table B12: Assessment of Exercise 2

Table B13: Assessment of Exercise 3

Table B14: Assessment of Exercise 4